Why is the perceptron considered one of the simplest artificial neural networks? A perceptron is a supervised machine learning algorithm that is frequently used in business intelligence.

A perceptron is generally regarded as a fundamental topic in machine learning and deep learning. It is an artificial neuron introduced by Frank Rosenblatt in 1957. Here, we will look at the perceptron in detail.

What Is a Perceptron?

A perceptron is an artificial neuron, also considered a basic unit in a neural network. If you are about to follow a learning roadmap for machine learning or artificial intelligence, then a perceptron can be the perfect beginning point.

- A Perceptron is the basic building block of neural networks, inspired by how the human brain works.

- It performs binary classification: given several inputs, the model delivers one of two possible outputs.

- It is a supervised learning algorithm for binary linear classification, and it can be arranged as a single layer or stacked into multiple layers.

- The first Perceptron was made to take a number of binary inputs and produce a binary output, either 0 or 1.

- The defining idea of a perceptron is to use different weights to represent the importance of each input.

- The perceptron model can only represent a relationship as a single straight line, which makes it incapable of solving non-linear patterns, like XOR.

- The activation function in this single-unit neural network uses the step rule to check whether the weighted sum is greater than 0 or not.

- A linear decision boundary represents the distinction between the linearly separable classes -1 and +1.

- When the sum of the input signals exceeds a certain defined threshold, it gives a signal in output; otherwise, it does not give any output.

A perceptron follows one simple rule: it outputs yes (1) only when the weighted sum of its input values is greater than the threshold value, and it outputs no (0) otherwise.
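
The threshold rule above can be sketched in a few lines of Python. This is only an illustration: the function name `perceptron_output` and the hand-picked weights and threshold are assumptions, chosen so that the unit behaves like a logical AND gate.

```python
def perceptron_output(inputs, weights, threshold):
    """Return 1 if the weighted sum of the inputs exceeds the threshold, else 0."""
    weighted_sum = sum(w * x for w, x in zip(weights, inputs))
    return 1 if weighted_sum > threshold else 0

# Hypothetical weights and threshold, picked by hand so the unit acts as an AND gate.
AND_WEIGHTS = [1.0, 1.0]
AND_THRESHOLD = 1.5

# Evaluate the unit on every combination of two binary inputs.
and_table = {
    (a, b): perceptron_output([a, b], AND_WEIGHTS, AND_THRESHOLD)
    for a in (0, 1)
    for b in (0, 1)
}
print(and_table)  # {(0, 0): 0, (0, 1): 0, (1, 0): 0, (1, 1): 1}
```

Only the input (1, 1) produces a weighted sum (2.0) above the threshold of 1.5, so it is the only case where the unit outputs 1.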


The History of Perceptron

The history of the Perceptron begins in the late 1950s, when psychologist Frank Rosenblatt introduced it as one of the earliest models of artificial neural networks. The model was inspired by the functioning of biological neurons: Rosenblatt designed it to mimic how the human brain processes information and learns from experience.

His work marked a groundbreaking moment in the field of artificial intelligence, as it provided the first computational model capable of learning from data through weight adjustments. Rosenblatt’s Perceptron received significant attention after the U.S. Navy funded the development of the Mark I Perceptron machine, a hardware implementation capable of recognizing simple visual patterns. This machine demonstrated how a neural network could adapt and make decisions based on input signals, giving many researchers confidence that intelligent machines could be built.

During this period, the Perceptron was widely celebrated, and Rosenblatt predicted that future networks could eventually learn complex tasks like speech recognition and language translation. It became the foundation of neural networks, powering AI systems across various domains.

Why is Perceptron Used In Machine Learning?

A perceptron is a neural network unit trained with a supervised machine learning algorithm. It has four main parts: the input values, the weights, the bias, and the weighted sum. It matters because it is the basic unit from which systems that interpret the world are built. Just as our brain processes sights, sounds, and patterns to make sense of our surroundings, machine learning models built from perceptron-like units analyze raw data like images, text, speech, or sensor inputs and convert it into meaningful insights.

Without such units, machines would only see data as numbers without context, making them unable to recognize objects, understand language, detect emotions, or make informed decisions. In real-world scenarios, neural networks that build on the perceptron concept are used in self-driving cars, helping them identify road signs and other objects on the road. The perceptron marks the starting point for making artificial intelligence more effective and impactful.


Characteristics of Perceptron Machine Learning

There are several points that define the concept of a perceptron in machine learning.

1. Input Processing

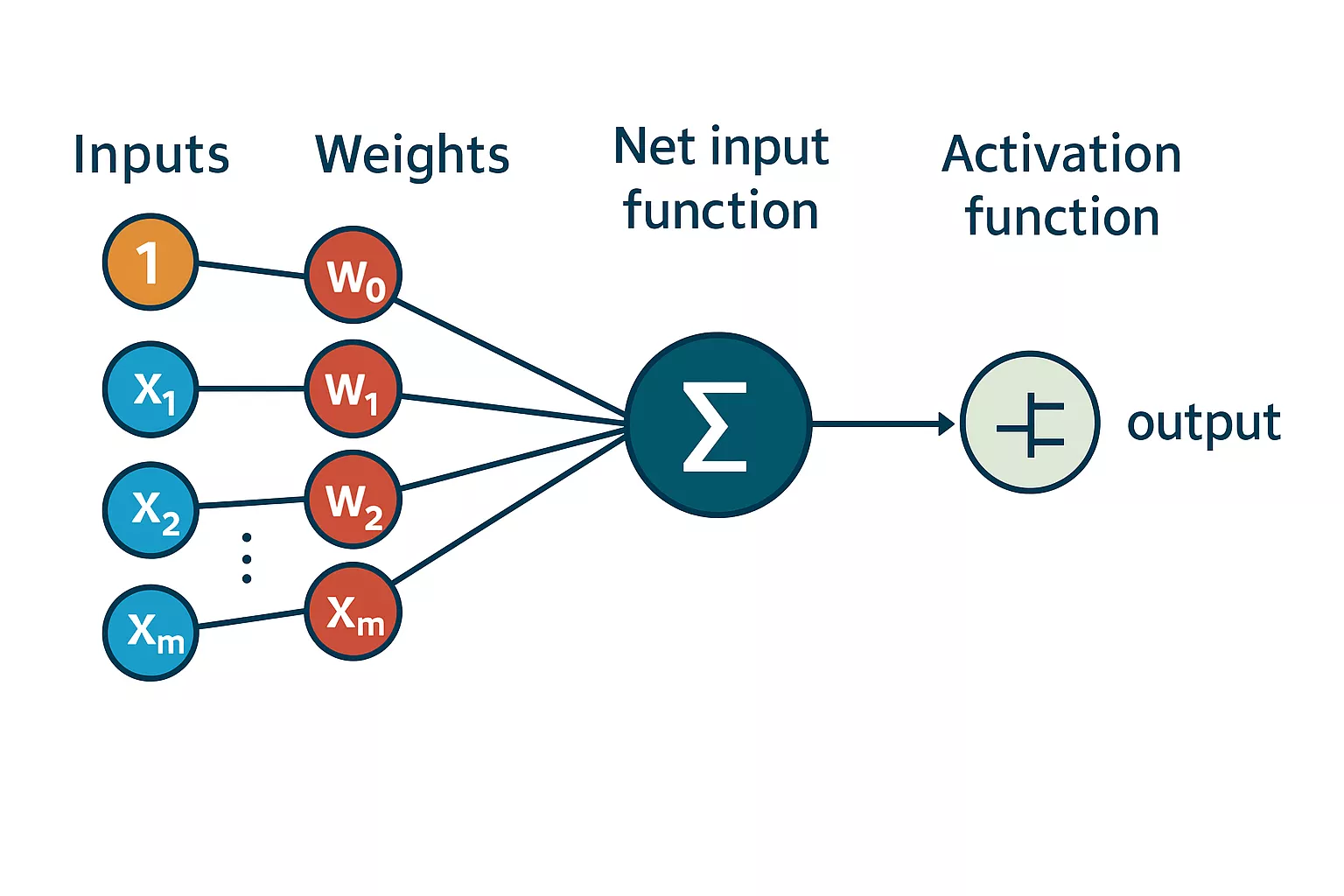

The model takes several input values, multiplies each one by its corresponding weight, and adds the results together. The weights represent the importance of each feature, helping the perceptron determine which features matter most for a particular decision.

2. Binary Linear Classifier

The perceptron model in machine learning is used mainly for binary classification, which means it chooses between two possible outcomes, such as yes or no. It does this by drawing a straight line (a hyperplane in higher dimensions) in the feature space to separate the data into two groups.

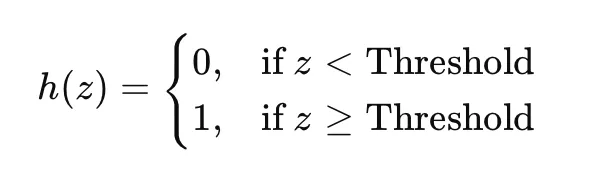
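
To make the idea of a separating line concrete, here is a minimal sketch that labels 2D points by which side of a line they fall on. The boundary x1 + x2 - 3 = 0, the sample points, and the function name `classify` are hypothetical values chosen for illustration.

```python
def classify(point, weights, bias):
    """Return +1 or -1 depending on which side of the line w . x + b = 0 the point lies."""
    score = sum(w * x for w, x in zip(weights, point)) + bias
    return 1 if score >= 0 else -1

# Hypothetical decision boundary: the line x1 + x2 - 3 = 0.
weights = [1.0, 1.0]
bias = -3.0

# Two points below the line and two points above it.
points = [(0.5, 1.0), (1.0, 1.5), (2.5, 2.0), (3.0, 1.0)]
labels = [classify(p, weights, bias) for p in points]
print(labels)  # [-1, -1, 1, 1]
```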

3. Activation Function

Once the weighted inputs are combined, the perceptron applies an activation function, usually a simple step function. This function checks whether the combined value is above or below a certain threshold. If it is above, the input is assigned to one class; if it is below, it is assigned to the other class.

4. Training Process

During training, the perceptron learns by comparing its predictions to the actual labels. When a prediction is wrong, it adjusts the weights slightly in the correct direction, which reduces the chance of the same error on the next pass.

This process helps the model improve over time and eventually find a decision boundary that best separates the two classes.
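
A single weight adjustment can be traced by hand. The sketch below assumes labels in {+1, -1} and the update rule w ← w + η y x; the starting weights, the example, and the learning rate are hypothetical values picked so the arithmetic is easy to follow.

```python
def score(weights, x):
    """Weighted sum of the inputs (no bias term in this sketch)."""
    return sum(w * xi for w, xi in zip(weights, x))

def update(weights, x, y, learning_rate):
    """Apply one perceptron update for a misclassified example with label y in {+1, -1}."""
    return [w + learning_rate * y * xi for w, xi in zip(weights, x)]

weights = [0.5, -1.0]   # hypothetical current weights
x, y = [1.0, 2.0], 1    # one training example whose true label is +1

before = score(weights, x)   # 0.5*1 + (-1.0)*2 = -1.5, so the prediction is -1 (wrong)
weights = update(weights, x, y, learning_rate=0.5)
after = score(weights, x)    # new weights are [1.0, 0.0], so the score is 1.0

print(before, after)  # -1.5 1.0
```

After one update the score for this example moves from negative to positive, so the same example is now classified as +1.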

5. Single-Layer Model

The perceptron consists of just one layer of computation, i.e., the input layer is directly connected to the output. There are no hidden layers in between, unlike more complex models. This simple structure makes it easy to understand and interpret, but it also limits what it can learn from the features.

6. Limitations

A perceptron draws only straight decision boundaries, so it fails on data that is not linearly separable. The XOR problem is the classic example. This limitation motivated the development of multi-layer neural networks, which, unlike a single perceptron, can learn non-linear relationships.
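
One way to see the XOR limitation is to search for weights that reproduce a truth table. The sketch below is an illustration, not a proof: it tries every combination of two weights and a bias on a small hypothetical grid. It finds a solution for AND but none for XOR, which matches the known result that no single straight line separates the XOR outputs.

```python
from itertools import product

def predict(w1, w2, b, x1, x2):
    """Single perceptron with a step activation on two inputs."""
    return 1 if w1 * x1 + w2 * x2 + b > 0 else 0

def separable_on_grid(truth_table, grid):
    """Return True if some (w1, w2, b) taken from the grid reproduces the truth table."""
    for w1, w2, b in product(grid, repeat=3):
        if all(predict(w1, w2, b, x1, x2) == y for (x1, x2), y in truth_table.items()):
            return True
    return False

AND = {(0, 0): 0, (0, 1): 0, (1, 0): 0, (1, 1): 1}
XOR = {(0, 0): 0, (0, 1): 1, (1, 0): 1, (1, 1): 0}

# Hypothetical search grid: values from -2.0 to 2.0 in steps of 0.5.
grid = [i / 2 for i in range(-4, 5)]

and_ok = separable_on_grid(AND, grid)
xor_ok = separable_on_grid(XOR, grid)
print(and_ok, xor_ok)  # True False
```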


The Perceptron Formula

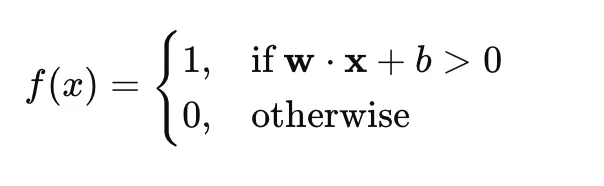

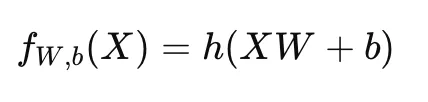

A perceptron is a function that maps an input vector x to an output value f(x). It multiplies each input by its weight coefficient in w, adds the bias b, and applies a step function: f(x) = 1 if w · x + b > 0, and f(x) = 0 otherwise.

Here,

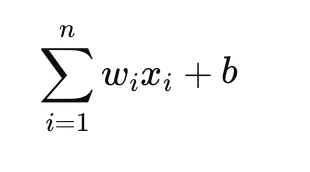

- w is the vector of real-valued weights

- b is the bias term

- x is the vector of input values

Here, m represents the number of inputs in the perceptron.

The Components of the Perceptron Neural Network

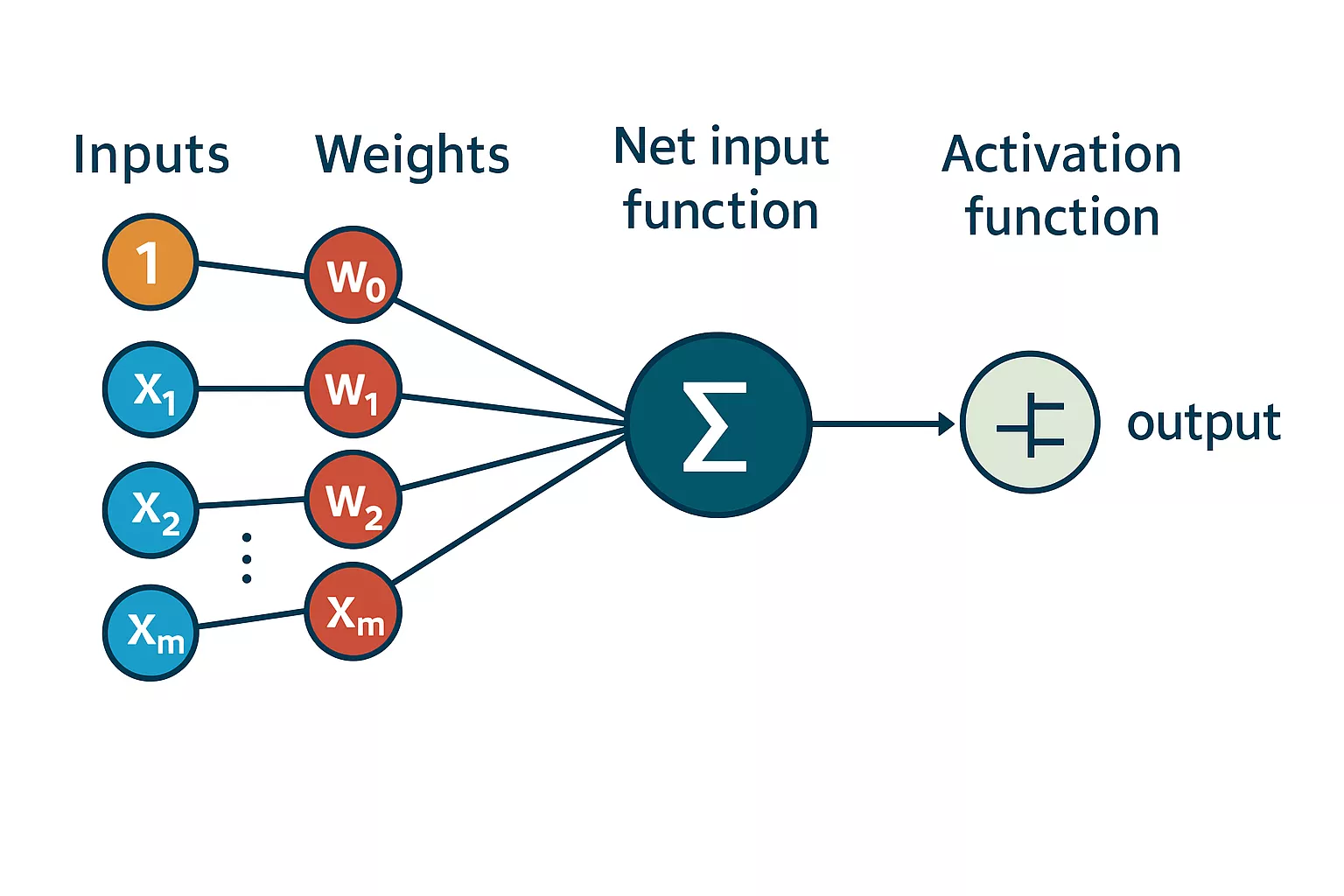

The Perceptron neural network model, invented by Frank Rosenblatt, consists of several important components that together make up the complete model.

- Input features: The perceptron model makes use of multiple input features, each representing some characteristic of the input data. These inputs are also known as nodes, and each node has a value and an associated weight.

- Node Weights (w): Each input feature fed into the perceptron is assigned a weight that signifies the strength of that node and determines its effect on the output. These weights are adjusted during training to find the optimal values.

- Node Values: The input values are generally taken in binary form, i.e., either 0 or 1, which can represent yes/no or true/false.

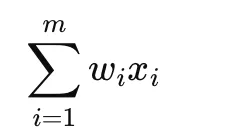

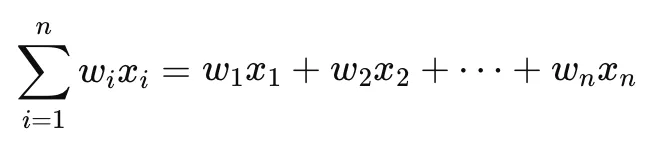

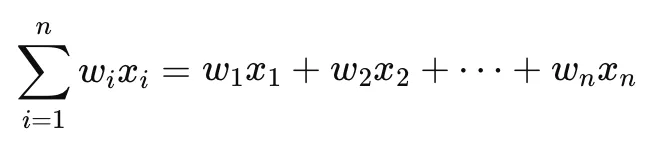

- Summation Function: This function multiplies each input by its corresponding weight and adds up the results to give the weighted sum used by the perceptron.

- The threshold: This is the value the weighted sum must exceed for the perceptron to activate, i.e., to output 1. Otherwise, it remains inactive and outputs 0.

- Activation Function: The activation function compares the weighted sum to the threshold and generates a binary output (either 0 or 1).

- Output: The output is decided or given by the activation function.

- Learning algorithm: The perceptron also adjusts its weights using a learning algorithm to minimize the prediction errors moving ahead.
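
The components above map onto code fairly directly. The sketch below also illustrates a standard equivalence that the list does not state explicitly: comparing the weighted sum to a threshold gives the same result as adding a bias equal to the negative threshold and comparing to 0. The weights, the threshold, and the function names are hypothetical.

```python
def summation(inputs, weights):
    """Summation function: weighted sum of the node values."""
    return sum(w * x for w, x in zip(weights, inputs))

def step_with_threshold(total, threshold):
    """Activation: output 1 only when the weighted sum exceeds the threshold."""
    return 1 if total > threshold else 0

def step_with_bias(total, bias):
    """The same activation written with a bias term, where bias = -threshold."""
    return 1 if total + bias > 0 else 0

weights = [0.6, 0.4]   # hypothetical node weights
threshold = 0.5        # hypothetical threshold

# For each binary input pair, record the output of both formulations.
results = []
for inputs in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    total = summation(inputs, weights)
    results.append((step_with_threshold(total, threshold),
                    step_with_bias(total, -threshold)))

print(results)  # [(0, 0), (0, 0), (1, 1), (1, 1)]
```

Both formulations agree on every input, which is why many descriptions of the perceptron use a bias term instead of an explicit threshold.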

Perceptron Learning Algorithm

Let us understand the perceptron learning algorithm step by step.

- First, every input is multiplied by its corresponding weight, and the products are added together to give the weighted sum: w1x1 + w2x2 + ... + wmxm

- When the bias b is taken into account, the representation becomes: w1x1 + w2x2 + ... + wmxm + b

- The most compact form of this entire mathematical representation is w · x + b
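
As a worked example of the compact form, assume three hypothetical inputs x = (1, 0, 1), weights w = (0.5, -0.2, 0.3), and bias b = -0.6. These values are illustrative and do not come from the text.

```latex
\begin{aligned}
\mathbf{w}\cdot\mathbf{x} + b
  &= \sum_{i=1}^{m} w_i x_i + b \\
  &= (0.5)(1) + (-0.2)(0) + (0.3)(1) + (-0.6) \\
  &= 0.2
\end{aligned}
```

Since 0.2 is greater than 0, a step activation that fires on positive values would output 1 for this input.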

Pseudocode

```
Input: training set {(x_i, y_i)}, learning rate η, max_epochs

Initialize w ← 0 (or small random values)
for epoch in 1..max_epochs:
    any_update = False
    for each (x, y) in training set:
        s = w · x
        y_hat = sign(s)
        if y_hat != y:
            w = w + η * y * x
            any_update = True
    if not any_update:
        break
return w
```

Let us break down each step in the given pseudocode to understand the complete algorithm.

- Data Preparation: Convert the labels to y ∈ {+1, −1}.

- Initialize Parameters: Initialize the weight vector w using zeros or small random values. A learning rate η > 0 is also selected at this stage.

- Repeat with Training Set: For each training example (x, y):

  - Calculate the activation (score): s = w · x

  - Predict the label: ŷ = sign(s), where sign(s) = +1 if s ≥ 0, else −1.

  - If the example is correctly classified (ŷ = y), do nothing.

  - If it is misclassified (ŷ ≠ y), update the weights: w ← w + η y x. This moves the decision boundary so the example is more likely to be classified correctly next time.

- The model stops when a full epoch completes with no weight updates. This means every training example is classified correctly, i.e., the classes have been fully separated.

- It also stops after a fixed number of epochs or when the validation performance stops showing improvement.

- The decision is made with the final weight vector (w), defining the decision rule.
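
The steps above can be sketched as runnable Python. This is a minimal illustration under stated assumptions, not a reference implementation: the function names are made up, the toy data is a logical OR with labels in {+1, −1}, and a constant 1.0 is appended to every input so that the last weight plays the role of a bias (the pseudocode itself has no bias term).

```python
def sign(s):
    """Return +1 if s >= 0, else -1 (the convention used in the steps above)."""
    return 1 if s >= 0 else -1

def dot(w, x):
    """Dot product w . x of two equal-length lists."""
    return sum(wi * xi for wi, xi in zip(w, x))

def train_perceptron(samples, learning_rate=1.0, max_epochs=100):
    """Train on (x, y) pairs with y in {+1, -1}; return (weights, epochs_used)."""
    w = [0.0] * len(samples[0][0])          # initialize w to zeros
    for epoch in range(1, max_epochs + 1):
        any_update = False
        for x, y in samples:
            if sign(dot(w, x)) != y:        # misclassified: w <- w + eta * y * x
                w = [wi + learning_rate * y * xi for wi, xi in zip(w, x)]
                any_update = True
        if not any_update:                  # a clean epoch means training is done
            return w, epoch
    return w, max_epochs

# Hypothetical toy data: logical OR. The trailing 1.0 in each input is a
# constant feature, so the last weight acts as a bias (an assumption of this sketch).
or_samples = [
    ([0.0, 0.0, 1.0], -1),
    ([0.0, 1.0, 1.0], 1),
    ([1.0, 0.0, 1.0], 1),
    ([1.0, 1.0, 1.0], 1),
]

weights, epochs = train_perceptron(or_samples)
predictions = [sign(dot(weights, x)) for x, _ in or_samples]
print(weights, epochs, predictions)
```

Because the OR data is linearly separable, the loop ends on an epoch with no updates well before `max_epochs`, and the final weights classify all four examples correctly.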

How Does a Perceptron Work?

In a perceptron, a weight is assigned to each input node. These weights represent the importance of that input in determining the output. The perceptron first calculates the weighted sum of the inputs. This sum is then passed through an activation function, which decides the final output.

The step function compares the weighted sum to the threshold. When the weighted sum is larger than the threshold value, the output becomes 1; otherwise, it is 0. The most frequently used activation function in a perceptron is the Heaviside step function.

A single-layer perceptron consists of one or more Threshold Logic Units (TLUs), each connected to all the input nodes. A layer in which every unit is connected to every input node (or to every neuron in the previous layer) is known as a fully connected or dense layer. Each unit in such a layer computes its output by applying the step function to the weighted sum of its inputs plus its bias, w · x + b.

When the perceptron trains, its weights are adjusted to minimize the difference between the predicted output and the actual output. This is achieved with a supervised learning algorithm such as the perceptron learning rule.
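
A fully connected layer of TLUs can be sketched as a loop over units that all read the same inputs. The example below is illustrative only: the function names are hypothetical, and the hand-picked weights and biases make the first unit behave like an OR gate and the second like an AND gate.

```python
def heaviside(z):
    """Step function: 1 when z is larger than 0, otherwise 0."""
    return 1 if z > 0 else 0

def tlu_layer(inputs, weight_rows, biases):
    """Compute the outputs of a layer of TLUs that all see the same inputs.

    weight_rows[j] holds the weights of unit j, and biases[j] is its bias.
    """
    outputs = []
    for unit_weights, bias in zip(weight_rows, biases):
        z = sum(w * x for w, x in zip(unit_weights, inputs)) + bias
        outputs.append(heaviside(z))
    return outputs

# Hypothetical layer with two units: unit 0 acts as OR, unit 1 acts as AND.
weight_rows = [[1.0, 1.0], [1.0, 1.0]]
biases = [-0.5, -1.5]

layer_outputs = {
    inputs: tlu_layer(inputs, weight_rows, biases)
    for inputs in [(0, 0), (0, 1), (1, 0), (1, 1)]
}
print(layer_outputs)
# {(0, 0): [0, 0], (0, 1): [1, 0], (1, 0): [1, 0], (1, 1): [1, 1]}
```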

Limitations of the Perceptron Neural Network

The Perceptron Neural Network has some limitations, most of which follow from it being one of the simplest artificial neural networks.

- A perceptron can only deliver a binary classification, i.e., 0 or 1.

- It can only be used to classify linearly separable sets of input vectors.

- It has difficulty learning complex patterns because it has no hidden layers and therefore cannot build hierarchical feature representations.

- It is sensitive to the choice of learning rate: a rate that is too large can make training unstable, while a rate that is too small makes learning slow.

- It has difficulty handling noisy data, so the decision boundary might become unstable when there are outliers, noise, or overlapping classes.


Perceptron Artificial Neuron FAQs

Q1. What is a Perceptron?

Ans: A perceptron is a foundational unit of a neural network. In its single-layer form, the output nodes are connected directly to several inputs, and each output is predicted by applying a step activation function to the weighted sum of the inputs plus a bias.

Q2. Who invented the Perceptron?

Ans: The Perceptron was invented by Frank Rosenblatt in the 1950s. It is one of the earliest models of artificial neural networks, forming the foundation of neural networks, deep learning, and advanced artificial intelligence.

Q3. What are the types of perceptrons?

Ans: There are two major types of perceptron: the single-layer perceptron and the multi-layer perceptron.

Q4. What are the weights in Perceptron?

Ans: The weights in a perceptron signify the strength of each input node. They determine how much effect each input has on the output.