Machine learning terminology: Machine learning uses many terms that are unfamiliar to newcomers. If you come from a non-technical background, or have not worked with artificial intelligence and machine learning recently, this terminology guide can help.

Machine learning is an important part of today’s tech evolution, so news about it is everywhere. Knowing the frequently used terms makes it easier to interpret how ML processes work. Here, let us learn some common machine learning terminology.

What Is Machine Learning?

Machine Learning is a method of teaching machines to learn things and improve predictions and efficiencies on their own after being trained with relevant data. With machine learning predictions, automation becomes easy.

- Machine Learning can be used to uncover critical patterns, make predictions, and enable personalization.

- Machine learning models are systems or applications that learn from data to solve problems and improve over time. They are crucial in areas like fraud detection, scientific research, healthcare, and more.

- A model learns patterns by training on a set of data, and then produces results for new inputs based on what it learned.

Also, being familiar with machine learning terminology can help you understand concepts related to machine learning easily.

Machine Learning Terminologies: Frequently Used Terms In ML

Let us go through some of the important terms used in machine learning.

1. Relationships In Machine Learning

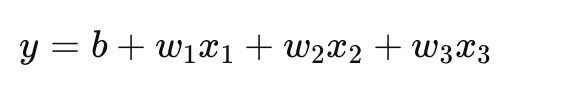

You might have come across this term when reading about machine learning. A relationship describes how the input features (x) influence the outcome or target (y). Models use these relationships to find patterns and make predictions.

You might know the straight-line relationship from algebra. Relationships in machine learning can be written in a similar way.

| y = b + wx |

where,

- y is the label we have to make a prediction for

- w is the weight of the data (slope)

- x is used to represent features (input terms)

- b is used for the intercept

2. Features in ML

A feature is an input fed to the ML model. In the straight-line equation, the variable x is the feature.

| y = b + wx |

where,

- y is the label we have to make a prediction for

- w is the weight of the data (slope)

- x is used to represent features (input terms)

- b is used for the intercept

Here, x represents the feature. Real datasets usually have many features, and each feature gets its own weight, so the equation extends to:

| y = b + w1x1 + w2x2 + w3x3 + w4x4 |
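The multi-feature equation can be sketched in a few lines of Python. This is only an illustration: the function name `predict` and all the numbers are made-up assumptions, not values from a real dataset.

```python
def predict(features, weights, bias):
    """Return y = b + w1*x1 + w2*x2 + ... for one example."""
    if len(features) != len(weights):
        raise ValueError("features and weights must have the same length")
    return bias + sum(w * x for w, x in zip(weights, features))

# Hypothetical example with four features
x = [1.0, 2.0, 3.0, 4.0]
w = [0.5, -1.0, 2.0, 0.0]
b = 1.0

y = predict(x, w, b)
print(y)  # 5.5
```

Note that the fourth weight is 0.0, so the fourth feature has no effect on the result.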

3. Labels

Labels are the values we want to predict using a machine learning model. In the straight-line equation used in the sections above, y is the label.

Here, y in the given relation represents the label.

| y = b + wx |

where,

- y is the label we have to make a prediction for

- w is the weight of the data (slope)

- x is used to represent features (input terms)

- b is used for the intercept

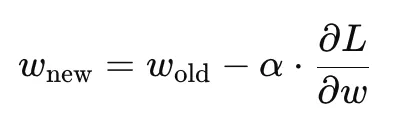

4. Training in Machine Learning

Training is the process in which a machine learning model learns patterns from data by adjusting its weights. The model repeatedly compares its predictions with the actual labels, calculates the error, and updates the weights to reduce that error.

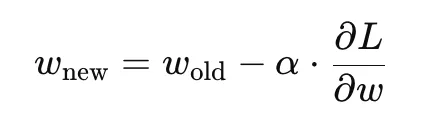

In ML, training is expressed using the weight-update rule:

| w = w − α · ∂L/∂w |

where:

- w = weight

- α = learning rate

- ∂L/∂w = gradient of the loss function

- L = loss/error

The model applies this update to every weight, again and again, so its predictions gradually improve.
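To make the training process concrete, here is a minimal sketch of gradient descent for a one-feature linear model. It assumes mean squared error as the loss, and the function name `train`, the learning rate, the number of epochs, and the toy data are all illustrative choices.

```python
def train(xs, ys, learning_rate=0.05, epochs=2000):
    """Fit y = b + w*x by gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Prediction error for every example with the current w and b
        errors = [(b + w * x) - y for x, y in zip(xs, ys)]
        # Gradients of the mean squared error with respect to w and b
        grad_w = (2.0 / n) * sum(e * x for e, x in zip(errors, xs))
        grad_b = (2.0 / n) * sum(errors)
        # Weight-update rule: step against the gradient
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b

# Toy data generated from y = 1 + 2x (an assumption for illustration)
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]

w, b = train(xs, ys)
print(round(w, 3), round(b, 3))  # should be close to 2.0 and 1.0
```

Because the data was generated from a straight line, the learned weight and intercept end up very close to the values used to create it.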

5. Loss Function

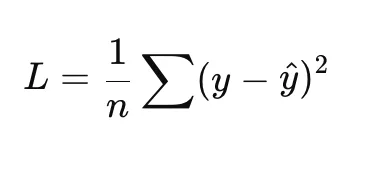

The loss function, also called the cost function, measures how wrong the model’s predictions are compared to the actual labels.

The goal of training is to minimize the loss. When the value of L is low, the model is performing well on that data. In linear regression, a common loss function is Mean Squared Error (MSE), where the sum runs over all n samples:

| MSE = (1/n) Σ (y − ŷ)² |

where:

- y = actual label

- ŷ = predicted label

- n = number of samples
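As a small sketch, Mean Squared Error can be computed like this. The function name `mean_squared_error` and the sample numbers are hypothetical and chosen only to keep the arithmetic easy to follow.

```python
def mean_squared_error(actual, predicted):
    """Average of squared differences between labels and predictions."""
    if len(actual) != len(predicted):
        raise ValueError("inputs must have the same length")
    n = len(actual)
    return sum((y - y_hat) ** 2 for y, y_hat in zip(actual, predicted)) / n

# Hypothetical labels and predictions
y = [3.0, 5.0, 7.0]
y_hat = [2.0, 5.0, 9.0]

# Squared errors are 1, 0 and 4, so the loss is 5/3
loss = mean_squared_error(y, y_hat)
print(loss)
```

If every prediction matched its label exactly, the function would return 0.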

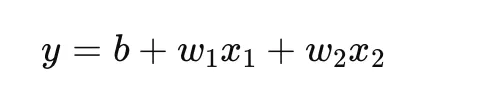

6. Prediction (ŷ — “y-hat”)

A prediction is the output generated by the model after it has learned from the dataset. The prediction is compared with the actual label to measure the error. In linear models:

| ŷ = b + w1x1 + w2x2 + … |

where:

- ŷ = predicted value

- x1,x2,… = features

- w1,w2,… = weights

- b = intercept or bias

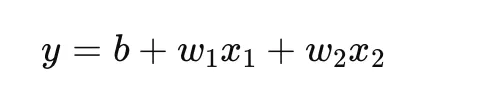

7. Bias

The bias, or intercept term, is a constant value added to the prediction equation. It lets the model produce a non-zero prediction even when all features are zero.

In linear regression, the equation is:

| y = b + wx |

Here, b is the bias. When x = 0, the prediction is simply b.

8. Weights

Weights are the parameters the model learns during training. They represent how important each feature is in predicting the output.

A weight with a larger magnitude means the input feature has a stronger influence on the output, assuming the features are on comparable scales. For multiple features:

| y = b + w1x1 + w2x2 + … |

9. Gradient

The gradient is the slope of the loss curve with respect to a weight. It tells the model how to adjust that weight to reduce the loss.

Gradient Descent updates weights in the opposite direction of the gradient. Mathematically, gradients can be represented as:

| Gradient = ∂L/∂w |
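The idea of a gradient as a slope can be checked numerically. The sketch below uses a made-up toy loss L(w) = (w − 3)², which is an assumption for illustration only, and compares its exact derivative with a finite-difference estimate.

```python
def loss(w):
    """Toy loss L(w) = (w - 3)^2, chosen only for illustration."""
    return (w - 3.0) ** 2

def analytic_gradient(w):
    """Exact derivative of the toy loss: dL/dw = 2 * (w - 3)."""
    return 2.0 * (w - 3.0)

def numeric_gradient(f, w, h=1e-5):
    """Central-difference estimate of the slope of f at w."""
    return (f(w + h) - f(w - h)) / (2.0 * h)

w = 5.0
print(analytic_gradient(w))       # 4.0
print(numeric_gradient(loss, w))  # close to 4.0
```

The gradient at w = 5 is positive, so moving in the opposite direction (decreasing w) brings the weight closer to the minimum at w = 3.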

10. Epochs

An epoch is one complete pass of the entire training dataset through the model. If you train for 10 epochs, the model sees the data 10 times.

11. Batch Size

The training data is usually divided into smaller groups called batches. The batch size is the number of samples processed before the model updates its weights.

For example:

- Dataset size = 60000

- Batch size = 100

- Steps per epoch = 60000 / 100 = 600

Here, the model updates its weights 600 times in each epoch, once per batch.
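The arithmetic in this example can be sketched in Python. The helper names `steps_per_epoch` and `batches` are hypothetical, and rounding up for a smaller final batch is one common convention rather than the only one.

```python
import math

def steps_per_epoch(dataset_size, batch_size):
    """Number of weight updates in one epoch (the last batch may be smaller)."""
    return math.ceil(dataset_size / batch_size)

def batches(data, batch_size):
    """Yield consecutive slices of `data` with at most `batch_size` items."""
    for start in range(0, len(data), batch_size):
        yield data[start:start + batch_size]

print(steps_per_epoch(60000, 100))                    # 600
print([len(b) for b in batches(list(range(10)), 4)])  # [4, 4, 2]

# Total weight updates when training for 10 epochs
print(10 * steps_per_epoch(60000, 100))               # 6000
```

When the dataset size is not a multiple of the batch size, the final batch simply holds the leftover samples.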

12. Activation Function

Activation functions introduce non-linearity so the model can learn complex relationships. Some common activation functions include:

- ReLU: f(x) = max(0, x)

- Sigmoid: f(x) = 1 / (1 + e^(−x))

- Softmax: f(x_i) = e^(x_i) / Σ_j e^(x_j)

The softmax is used to convert the outputs into probabilities.
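These three functions are short enough to sketch in plain Python. This is a simplified illustration: real libraries apply them to whole arrays, and the `sigmoid` below would overflow for extremely negative inputs.

```python
import math

def relu(x):
    """Return x for positive inputs and 0 otherwise."""
    return max(0.0, x)

def sigmoid(x):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def softmax(values):
    """Turn a list of scores into probabilities that sum to 1."""
    # Subtracting the maximum avoids overflow and does not change the result
    m = max(values)
    exps = [math.exp(v - m) for v in values]
    total = sum(exps)
    return [e / total for e in exps]

print(relu(-2.0), relu(3.0))  # 0.0 3.0
print(sigmoid(0.0))           # 0.5

probs = softmax([1.0, 2.0, 3.0])
print(probs, sum(probs))      # the largest score gets the largest probability
```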

13. Model Parameters

The model parameters include both the weights (w) and the bias (b), which the model learns during training.

For example, for a model with 3 features:

| Parameters = { w1, w2, w3, b } |

14. Hyperparameters

Hyperparameters are settings chosen before training, and are not learned from data. For example:

- Learning rate

- Batch size

- Number of epochs

- Number of layers

- Number of neurons

In equations, the learning rate commonly appears in the weight-update rule:

| w = w − α · ∂L/∂w |

where α is a hyperparameter.
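To see why the learning rate matters, here is a small sketch that runs gradient descent on a toy loss L(w) = w², whose gradient is 2w. The loss, the function name `minimize`, and both learning rates are assumptions picked for illustration.

```python
def minimize(learning_rate, steps=20, w=1.0):
    """Run gradient descent on the toy loss L(w) = w^2 (gradient 2w)."""
    for _ in range(steps):
        w = w - learning_rate * 2.0 * w
    return w

small = minimize(learning_rate=0.1)  # shrinks toward the minimum at 0
large = minimize(learning_rate=1.1)  # overshoots and grows in magnitude

print(abs(small) < 1.0, abs(large) > 1.0)  # True True
```

With the small learning rate each step moves w closer to 0, while the large one jumps past the minimum by more than it started with, so the weight moves further away on every step.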

15. Overfitting

Overfitting in machine learning happens when the model memorizes the training data instead of learning general patterns. This hurts its performance on data it has not seen before. In terms of loss, the model shows:

| Low loss on training data but high loss on test data. |

This means the model generalizes poorly, which is why overfitting is an important thing to check when evaluating a model.
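The gap between training loss and test loss can be shown with a deliberately extreme sketch: a "model" that only memorizes its training pairs. The data, the `memorizer` function, and the fallback answer of 0.0 are all made up for illustration.

```python
def mse(actual, predicted):
    """Mean squared error between two equal-length lists."""
    return sum((y - p) ** 2 for y, p in zip(actual, predicted)) / len(actual)

# Toy data following y = 2x, split into training and test sets
train_x, train_y = [1.0, 2.0, 3.0], [2.0, 4.0, 6.0]
test_x, test_y = [4.0, 5.0], [8.0, 10.0]

# A "model" that only memorizes training pairs and answers 0.0 otherwise
memory = dict(zip(train_x, train_y))

def memorizer(x):
    return memory.get(x, 0.0)

train_loss = mse(train_y, [memorizer(x) for x in train_x])
test_loss = mse(test_y, [memorizer(x) for x in test_x])
print(train_loss, test_loss)  # 0.0 82.0
```

The training loss is zero because every training input is in memory, but the test loss is large because the model never learned the underlying rule.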

16. Underfitting

Underfitting in machine learning occurs when the model is too simple and cannot learn the underlying pattern in the dataset. The loss of an underfit model is high on both training and test data.

For example, an underfit model may output nearly the same value for every input:

| y^ ≈ constant |

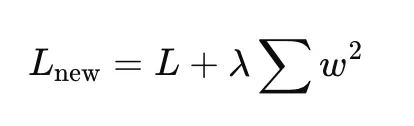
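As a rough sketch of underfitting, consider a model that ignores its input and always predicts the average training label. The toy data below follows y = 2x and is an assumption for illustration.

```python
def mse(actual, predicted):
    """Mean squared error between two equal-length lists."""
    return sum((y - p) ** 2 for y, p in zip(actual, predicted)) / len(actual)

# Toy data following y = 2x, split into training and test sets
train_x, train_y = [1.0, 2.0, 3.0], [2.0, 4.0, 6.0]
test_x, test_y = [4.0, 5.0], [8.0, 10.0]

# Underfit model: ignores x and always predicts the training mean
constant = sum(train_y) / len(train_y)

train_loss = mse(train_y, [constant] * len(train_y))
test_loss = mse(test_y, [constant] * len(test_y))
print(constant, train_loss, test_loss)
```

Both losses are well above zero, whereas a model that learned y = 2x would reach zero loss on both sets.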

17. Regularization

Regularization is used to prevent overfitting by adding a penalty for large weights to the loss. One common form, L2 regularization, defines the new loss Lnew as:

| Lnew = L + λ Σ w² |

where:

- λ = regularization factor

- w = weights
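Here is a minimal sketch of a regularized loss. It assumes the L2 form of the penalty (sum of squared weights), which is only one of several options, and the function name and numbers are hypothetical.

```python
def l2_regularized_loss(base_loss, weights, lam):
    """Return base_loss + lam * (sum of squared weights)."""
    penalty = lam * sum(w ** 2 for w in weights)
    return base_loss + penalty

# Hypothetical numbers: the squared weights sum to 1 + 4 + 9 = 14
new_loss = l2_regularized_loss(base_loss=0.5, weights=[1.0, -2.0, 3.0], lam=0.01)
print(new_loss)  # approximately 0.64
```

Setting `lam` to 0 switches the penalty off, while a larger value pushes the model harder toward small weights.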

18. Optimization Algorithm

Optimizers in machine learning adjust the model weights to reduce the loss. Some of the common optimizers used in ML are:

- Gradient Descent

- Adam

- RMSProp

- SGD

Machine Learning Terminology FAQs

Q1. What is machine learning?

Ans: Machine Learning is a method of teaching machines to learn things and improve predictions and efficiencies on their own after being trained with relevant data.

Q2. What are weights in machine learning terminology?

Ans: Weights are the parameters a model learns during training. They represent how important each feature is in predicting the output.

Q3. What is an activation function in machine learning?

Ans: An activation function in a neural network is a mathematical function applied to the output of a neuron to introduce non-linearity, so the model can learn complex relationships.

Q4. Is overfitting good for ML models?

Ans: No. Overfitting is one of the biggest problems in machine learning because it hurts the performance of your model. In this condition, your model works well on the training data but performs poorly on new or unfamiliar data.