Decision trees stand out as a user-friendly machine learning technique suitable for both regression and classification tasks. They are easy to understand and implement, which makes them an ideal choice for beginners.

In this article, we will explore machine learning through the working principles of decision trees: their various types, how a decision tree is constructed, and methods for evaluating and optimizing decision trees.

By the end of this article, you will have a solid understanding of decision trees and the machine learning concepts related to them. Please check our course to understand machine learning in detail.

What Is A Decision Tree?

A decision tree is a hierarchical structure used in machine learning for decision-making. It consists of a root node, branches, decision (internal) nodes, and leaf nodes.

Decision trees are used in both classification and regression tasks, providing the user with a simple and understandable model. Each decision node tests a feature and splits the data into branches based on the result.

Leaf nodes represent final outcomes or predictions. The tree is built using algorithms, such as ID3, C4.5, and CART, that select the best feature to split on at each node. Techniques like pruning help prevent overfitting.

Because they can handle both classification and regression tasks, decision trees are versatile and widely used in machine learning and data analysis.

Let Us Understand Decision Trees In Machine Learning More Clearly With An Example:

Imagine you’re deciding whether to go for a walk with the help of a decision tree. The decision tree would start at the top with a question like “Is the weather sunny?” If yes, it might ask, “Is it windy?” If it is not windy, you go for a walk; if it is windy, you stay inside.

If the weather isn’t sunny, the decision tree might ask, “Is it raining?” If yes, stay inside; if no, you can still go for a walk. Each question leads to different paths, like branches on a tree, until you reach a final decision at the end.
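To make the walk example concrete, here is a minimal Python sketch that stores the tree described above as nested dictionaries and follows one path from the root to a leaf. The dictionary layout and the `decide` function are hypothetical, chosen only for illustration; they are not part of any library.

```python
# Each internal node asks a yes/no question about one condition;
# each leaf is simply the final decision string.
walk_tree = {
    "question": "sunny",
    "yes": {"question": "windy", "yes": "stay inside", "no": "go for a walk"},
    "no": {"question": "raining", "yes": "stay inside", "no": "go for a walk"},
}


def decide(node, conditions):
    """Follow the path from the root to a leaf for the given conditions."""
    # A plain string means we have reached a leaf (the final decision).
    while isinstance(node, dict):
        answer = "yes" if conditions[node["question"]] else "no"
        node = node[answer]
    return node


print(decide(walk_tree, {"sunny": True, "windy": False}))    # go for a walk
print(decide(walk_tree, {"sunny": False, "raining": True}))  # stay inside
```

Only the conditions on the path actually taken need to be supplied, which mirrors how a real tree only asks the questions along one branch.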

Decision Tree Terminologies:

Root Node:

The root node is the topmost node in the decision tree, where the decision-making process begins. It represents the entire input dataset and is split into further decision nodes.

Decision Nodes:

Nodes produced by splitting the root node (or another decision node) are known as decision nodes. Each decision node is further split into other decision nodes or leaf nodes.

Leaf Nodes:

Leaf nodes, or terminal nodes, are nodes that are not split any further. Each leaf holds the final output of the tree: a class label in classification or a predicted value in regression.

Sub Tree:

A subtree is a smaller portion of a larger tree. It consists of one node, which acts as the root of the subtree, together with all of the nodes formed by splitting that node.

Pruning:

Pruning refers to the process of removing branches or nodes that do not contribute significantly to the accuracy of the tree. It helps prevent overfitting and improves the tree’s ability to generalize to new data.

Branch:

A branch is another name for a subtree: any subsection of the entire tree is called a branch or subtree.

Parent and Child Node:

A parent node is a node that is split into one or more child nodes. A child node is a node directly connected below its parent node. A child node can itself become a parent if it is split further.

Example Of Decision Tree In Machine Learning:

Let us understand decision trees with the help of the example given below:

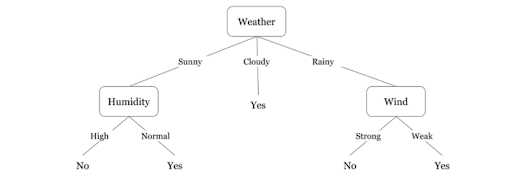

Suppose you want to decide whether to go out and play. You could make a decision tree like the one shown above.

Here, Weather is the root node.

- The decision tree will first ask, “What is the weather?” The weather can take three values: Sunny, Cloudy, or Rainy.

Sunny, Cloudy, and Rainy are the sub-nodes here.

- If it is sunny, the decision tree will further ask, “Is the humidity high or normal?”

If it is high, the answer for playing outside will be “No”, and if it is normal, the answer will be “Yes”.

Similarly, if it is cloudy, the answer for playing outside will be “Yes”.

How The Decision Tree Algorithm Works:

Let us understand the basic algorithm of the decision tree in machine learning through the simple steps given below:

- Start with the Root Node: Begin at the root node of the decision tree, which represents the entire dataset. The root node contains the first question on which the rest of the tree is built.

- Ask the Best Question: Evaluate each feature in the dataset, using a criterion such as information gain, to find the best one for splitting.

- Split the Dataset: Based on the answer to the question at the current node, divide the data into child nodes. Repeat the same process for each child node.

- Create Leaf Nodes: When the algorithm stops splitting, the final nodes are called leaf nodes. Each leaf node represents a class or a predicted value.

- Make Predictions: To make a prediction for new data, follow the decision path from the root node to a leaf node and return that leaf’s value.
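The steps above can be sketched in a few lines of Python. This is a minimal ID3-style sketch, assuming categorical features and using information gain (explained later in this article) to pick the best question at each node. The function names, the nested-dictionary tree format, and the tiny dataset (which mirrors only the sunny and cloudy branches of the play example above) are hypothetical. Production libraries such as scikit-learn use an optimized version of the CART algorithm with binary splits instead.

```python
import math
from collections import Counter


def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    total = len(labels)
    return sum(-(c / total) * math.log2(c / total) for c in Counter(labels).values())


def information_gain(rows, labels, feature):
    """Entropy before splitting on `feature` minus the weighted entropy after."""
    groups = {}
    for row, label in zip(rows, labels):
        groups.setdefault(row[feature], []).append(label)
    weighted = sum(len(g) / len(labels) * entropy(g) for g in groups.values())
    return entropy(labels) - weighted


def build_tree(rows, labels, features):
    """Grow the tree top-down: pick the best feature, split, then recurse."""
    # Leaf: the node is pure or there are no features left to ask about,
    # so return the majority class.
    if len(set(labels)) == 1 or not features:
        return Counter(labels).most_common(1)[0][0]
    # Greedy step: choose the feature with the highest information gain.
    best = max(features, key=lambda f: information_gain(rows, labels, f))
    remaining = [f for f in features if f != best]
    node = {"feature": best, "children": {}}
    # Split the data on each observed value of the chosen feature and recurse.
    for value in sorted({row[best] for row in rows}):
        idx = [i for i, row in enumerate(rows) if row[best] == value]
        node["children"][value] = build_tree(
            [rows[i] for i in idx], [labels[i] for i in idx], remaining
        )
    return node


def predict(node, row):
    """Follow the decision path from the root down to a leaf."""
    while isinstance(node, dict):
        node = node["children"][row[node["feature"]]]
    return node


# Hypothetical toy data mirroring the sunny/cloudy branches of the play example.
rows = [
    {"weather": "sunny", "humidity": "high"},
    {"weather": "sunny", "humidity": "normal"},
    {"weather": "cloudy", "humidity": "high"},
    {"weather": "cloudy", "humidity": "normal"},
    {"weather": "cloudy", "humidity": "high"},
]
labels = ["no", "yes", "yes", "yes", "yes"]

tree = build_tree(rows, labels, ["weather", "humidity"])
print(tree["feature"])                                          # weather
print(predict(tree, {"weather": "sunny", "humidity": "high"}))  # no
```

Note that this sketch's `predict` raises a `KeyError` for a feature value never seen during training; a fuller implementation would fall back to the majority class at that node.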

Decision Tree Assumptions:

Decision tree assumptions are used to make the algorithm simpler and faster. They help create easy-to-understand rules. However, these assumptions may not always be true in real-world data, so we need to check how well the model works in practice.

Below are some common assumptions used while constructing a decision tree.

Binary Splits:

Binary splitting is an important concept in decision trees. Many algorithms, such as CART, assume that each node is split into exactly two child nodes.

Binary splitting simplifies decision-making by breaking down complex choices into simpler yes/no decisions, leading to clear and interpretable decision paths in the decision tree.
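As an illustration of binary splitting on a numeric feature, the sketch below turns a feature such as humidity into yes/no questions of the form “is humidity <= t?”. It takes candidate thresholds at the midpoints between consecutive sorted values, a common choice in CART-style trees. The function names and the humidity readings are hypothetical.

```python
def candidate_thresholds(values):
    """Midpoints between consecutive distinct sorted values."""
    distinct = sorted(set(values))
    return [(a + b) / 2 for a, b in zip(distinct, distinct[1:])]


def binary_split(rows, feature, threshold):
    """Answer the yes/no question 'is feature <= threshold?' for every row."""
    yes = [r for r in rows if r[feature] <= threshold]
    no = [r for r in rows if r[feature] > threshold]
    return yes, no


# Hypothetical humidity readings (percent) for five days.
days = [{"humidity": h} for h in [65, 70, 85, 90, 95]]
print(candidate_thresholds([d["humidity"] for d in days]))  # [67.5, 77.5, 87.5, 92.5]

yes, no = binary_split(days, "humidity", 77.5)
print(len(yes), len(no))  # 2 3
```

A tree builder would score each candidate threshold (for example, with information gain) and keep the best one as the node’s question.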

Recursive Partitioning:

Recursive partitioning is the process by which a decision tree is grown: each node is divided into child nodes, and the same procedure is applied to each child until a stopping criterion is met.

This process breaks a large, complex dataset into smaller and simpler subsets, each of which is easier to describe and predict.

Homogeneity:

While splitting data at a node in a decision tree, the main aim is to create subsets that are as homogeneous as possible, meaning that the samples in each subset mostly share the same target value.

Aiming for homogeneity keeps the data within each node relatively uniform, which leads to clear and accurate decision-making.

Top-Down Greedy Approach:

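Decision trees are usually built with a top-down greedy approach. Starting from the root, each split is chosen to give the best immediate result at the current node, for example by maximizing information gain or minimizing impurity, without looking ahead to later splits. This makes tree construction fast, but it does not guarantee the best possible tree overall.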

Overfitting:

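Overfitting happens when a decision tree model is too complex and captures noise or random fluctuations in the training data, leading to poor performance on new data. Overfitting can be reduced by simplifying the tree (for example, through pruning or by limiting its depth) and by increasing the amount of training data, for instance through data augmentation.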

Entropy:

Entropy measures how mixed or disorganized the class labels in our data are. Decision trees use it to judge how good a split is.

Let us understand Entropy more clearly with an example:

Imagine we have a basket of fruits, and we want to organize them based on their colors. We have three red apples, two green apples, and one yellow banana.

Entropy is like a measure of how mixed up or disorganized our fruits are in terms of their colors.

Low Entropy: If all the fruits are the same color (e.g., all red apples), the entropy is low because it’s very organized and easy to predict.

High Entropy: If the fruits are mixed in terms of colors (e.g., red, green, and yellow), the entropy is high because they are more disorganized and harder to predict.
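Entropy is usually computed as H = −Σ pᵢ log₂(pᵢ), where pᵢ is the fraction of items belonging to class i. The minimal Python sketch below applies this formula to the fruit basket above (three red, two green, one yellow) and to a hypothetical basket of six red apples.

```python
import math
from collections import Counter


def entropy(labels):
    """Shannon entropy in bits: H = -sum(p * log2(p)) over the classes."""
    total = len(labels)
    counts = Counter(labels).values()
    return sum(-(c / total) * math.log2(c / total) for c in counts)


mixed_basket = ["red"] * 3 + ["green"] * 2 + ["yellow"]
red_only = ["red"] * 6

print(round(entropy(mixed_basket), 3))  # 1.459
print(entropy(red_only))                # 0.0
```

For three colors, the highest possible entropy is log₂(3) ≈ 1.585 bits (an equal mix), so the mixed basket is close to maximally disorganized, while the single-color basket has zero entropy.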

Information Gain:

Information gain is an important concept in machine learning, used mostly in decision trees. It measures how effectively a feature reduces uncertainty (entropy) and improves the overall decision-making of the tree.

Information gain is calculated as the difference between the entropy of the current node (before splitting) and the weighted sum of the entropies of all its child nodes (after splitting), where each child is weighted by the fraction of samples it receives.

This concept helps a decision tree decide which feature to split on first. By quantifying how much each feature reduces uncertainty in predicting the outcome, information gain lets the tree choose the most useful split, which leads to more effective and accurate decisions.
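Written as a formula, IG = H(parent) − Σₖ (nₖ / n) · H(childₖ), where nₖ is the number of samples in child k and n is the number in the parent. The minimal Python sketch below compares two hypothetical splits of a node holding four “yes” and four “no” labels; the split data is made up for illustration.

```python
import math
from collections import Counter


def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    total = len(labels)
    return sum(-(c / total) * math.log2(c / total) for c in Counter(labels).values())


def information_gain(parent, children):
    """Entropy of the parent minus the weighted entropy of its children.

    Each child's entropy is weighted by the fraction of the parent's
    samples that fall into that child.
    """
    n = len(parent)
    weighted = sum(len(child) / n * entropy(child) for child in children)
    return entropy(parent) - weighted


parent = ["yes"] * 4 + ["no"] * 4
split_a = [["yes", "yes", "yes", "no"], ["yes", "no", "no", "no"]]  # partly mixed
split_b = [["yes"] * 4, ["no"] * 4]                                 # perfectly pure

print(round(information_gain(parent, split_a), 3))  # 0.189
print(information_gain(parent, split_b))            # 1.0
```

Split B removes all uncertainty, so a greedy tree builder would prefer it over split A.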

When To Stop Splitting The Decision Tree:

Knowing when to stop splitting a decision tree is crucial to prevent overfitting and to keep the tree simple and efficient.

There are several ways to decide when to stop splitting a decision tree:

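Maximum Depth: We can limit the depth of the tree by specifying a maximum depth. This prevents the tree from growing too deep and capturing noise in the data. In libraries such as scikit-learn, this is set with the max_depth parameter.

The deeper the tree, the more complex it becomes and the more likely it is to overfit, so it is usually advisable to keep the depth of the tree limited.

Apart from this, other common ways to stop splitting include requiring a minimum number of samples before a node can be split (min_samples_split in scikit-learn) and early stopping, where splitting ends once further splits no longer improve the tree enough.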
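The stopping rules above can be sketched as a single check made before each split. This is a minimal sketch: the parameter names mirror scikit-learn’s max_depth and min_samples_split, but the `should_stop` function itself is hypothetical and is not how scikit-learn works internally, and the default values are only illustrative.

```python
def should_stop(labels, depth, max_depth=3, min_samples_split=2):
    """Return True if a node should become a leaf instead of being split."""
    if len(set(labels)) <= 1:
        return True  # the node is already pure
    if depth >= max_depth:
        return True  # the tree has reached its maximum depth
    if len(labels) < min_samples_split:
        return True  # too few samples left to justify a split
    return False


print(should_stop(["yes", "no"], depth=1))                             # False
print(should_stop(["yes", "no"], depth=3))                             # True
print(should_stop(["yes", "no", "no"], depth=1, min_samples_split=5))  # True
```

In scikit-learn, the same limits are passed to the estimator itself, for example DecisionTreeClassifier(max_depth=3, min_samples_split=10).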

Learn Machine Learning with PW Skills:

Enroll in our Advanced AI Course to learn the principles, basics, and all the tools required for machine learning. Whether you are a beginner or looking to switch careers, this course will be the right fit for you.

With a roadmap, knowledge of all the tools, an updated curriculum, interactive classes, practice material, regular doubt-clearing sessions, and guidance from experienced teachers, the course helps you prepare for your desired job in the field of machine learning.

FAQs on Decision Tree

What is the Purpose of a Decision Tree?

A decision tree is an important tool in machine learning. The purpose of creating a decision tree is to make predictions and decisions by learning from past data. It also helps us analyze every possible outcome before making a decision.

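What is another name for a decision tree?

A decision tree is called a classification tree when it predicts categories and a regression tree when it predicts continuous values. The two are often grouped together under the name CART (Classification and Regression Trees).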

What is a decision tree in machine learning?

A decision tree is a hierarchical structure used in machine learning for decision-making. It organizes data related to decisions and outcomes into a tree-like structure.

How does a decision tree work?

A decision tree starts at the root node and makes decisions at each node based on feature values, splitting the data into branches. This process continues until it reaches leaf nodes, which provide the final predictions.