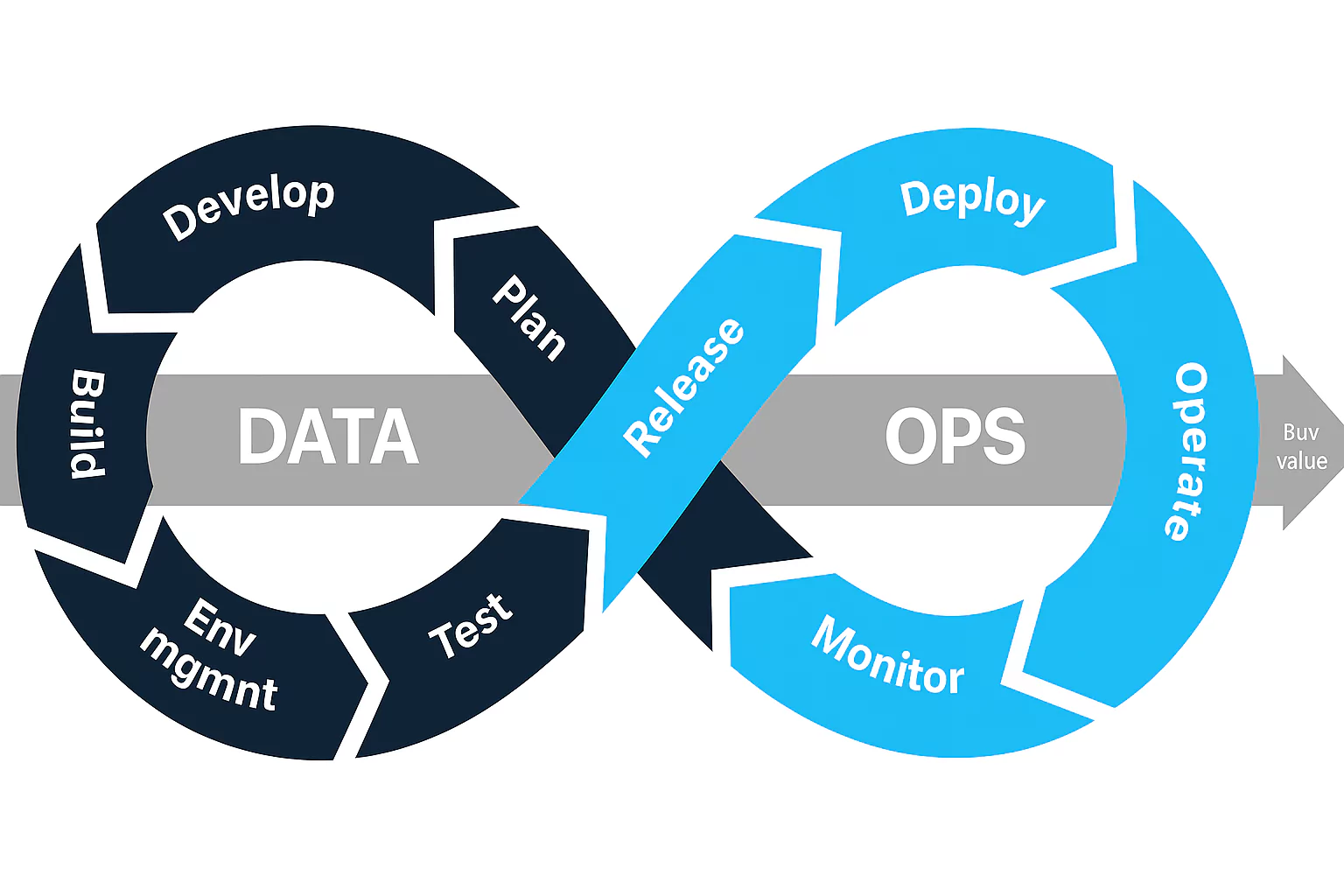

DataOps is closely related to DevOps, not only in name but also in purpose: both aim to make work faster, more efficient and better optimised. DataOps is a modern practice that integrates and automates the way organisations extract, process and use data.

With DataOps, continuous improvement is applied across the whole data analytics process, from data preparation to reporting. In this blog, we will learn more about the DataOps approach and its use in the data analytics field.

What Is DataOps?

DataOps is an agile methodology for continuously improving how data is delivered and used, with the goals of faster delivery, higher quality and better collaboration around data. It keeps data analysis and management processes efficient through automation and agile methods.

DataOps uses automation to perform various operations on data, such as streamlining data management, transferring data and automatically detecting errors or gaps in data. With DataOps, we can automate repetitive manual tasks to save resources and free teams for more productive work.

With DataOps, we can deliver data faster and with fewer errors through data pipelines that handle large volumes of data. DataOps also monitors and continuously tests these pipelines to make sure every process runs as planned.
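To make "continuously tests data pipelines" concrete, here is a minimal sketch in plain Python, assuming a pipeline is just an ordered list of steps where each step is followed by a check on its output. The step names (`drop_missing_amount`, `to_cents`) and the record layout are hypothetical, chosen only for illustration; real teams usually get this behaviour from an orchestrator plus a testing framework.

```python
from typing import Any, Callable, Dict, List, Tuple

Record = Dict[str, Any]
Step = Callable[[List[Record]], List[Record]]
Check = Callable[[List[Record]], bool]


def run_pipeline(data: List[Record], steps: List[Tuple[str, Step, Check]]) -> List[Record]:
    """Run each step in order and test its output; stop at the first failing check."""
    for name, step, check in steps:
        data = step(data)
        if not check(data):
            # Failing fast keeps bad data from flowing into later steps.
            raise RuntimeError(f"check failed after step '{name}'")
    return data


# Hypothetical steps, for illustration only.
def drop_missing_amount(rows: List[Record]) -> List[Record]:
    return [r for r in rows if r.get("amount") is not None]


def to_cents(rows: List[Record]) -> List[Record]:
    return [{**r, "amount": int(round(r["amount"] * 100))} for r in rows]


steps = [
    ("drop_missing_amount", drop_missing_amount, lambda rows: len(rows) > 0),
    ("to_cents", to_cents, lambda rows: all(isinstance(r["amount"], int) for r in rows)),
]

raw = [{"id": 1, "amount": 12.5}, {"id": 2, "amount": None}, {"id": 3, "amount": 3.0}]
result = run_pipeline(raw, steps)
print(result)  # [{'id': 1, 'amount': 1250}, {'id': 3, 'amount': 300}]
```

Pairing every step with its own check means a failure points at the exact step that produced bad data, rather than surfacing later in a report.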

Why Is DataOps Important?

DataOps streamlines many data management and data analysis tasks and keeps them efficient and productive through large-scale automation. It is used to build and scale data products with CI/CD-style automation, giving data engineers a more disciplined way to manage data and analysis processes. Managing data manually quickly becomes tedious; DataOps addresses this by handling data with automation and agile methods.

DataOps breaks down the bottlenecks between producers and consumers of data and makes sure that both rely on the same trustworthy data source. It also improves collaboration between teams that need to access and analyse data to extract useful insights.

How Can Organisations Benefit From DataOps?

DataOps can benefit organisations in many fields. Let us pin down some of the major ways here.

- With DataOps, businesses can ensure better data quality through automated routine checks, better monitoring, integration, and more.

- DataOps saves time and resources by automating repetitive tasks. This frees teams to work on more important analysis tasks.

- DataOps increases the delivery speed of analytical insights through faster collaboration and shorter development life cycles, at a lower cost.

- DataOps reduces risk by giving more transparency and visibility into who uses the data and how.

- Teams can access data in real time, which improves accessibility and helps them make better decisions in less time.

DataOps offers many other important benefits across data management and analysis tasks.

Why is DataOps Considered a Better Approach For Data?

DataOps provides an efficient way to organise data analysis and management work across teams of experts such as data engineers, data scientists, analysts, IT operations staff and software developers, depending on the business objective.

DataOps helps manage crucial operations such as data pipelines, quality monitoring, governance, security and data operations across different platforms. With data quality monitoring, you get real-time updates on data quality and can make sure good-quality data is available whenever it is required.
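Data quality monitoring usually boils down to computing a few signals on every load and alerting when they cross a threshold. The sketch below, assuming records are plain dictionaries and that the load time is known, computes two common signals: freshness (is the data newer than an agreed maximum age?) and the null rate of one column. The function name `quality_report` and the two-hour threshold are hypothetical examples, not part of any specific tool.

```python
from datetime import datetime, timedelta, timezone
from typing import Any, Dict, List, Optional


def quality_report(
    rows: List[Dict[str, Any]],
    column: str,
    last_updated: datetime,
    max_age: timedelta,
    now: Optional[datetime] = None,
) -> Dict[str, Any]:
    """Compute two simple quality signals: freshness and the null rate of one column."""
    now = now or datetime.now(timezone.utc)
    nulls = sum(1 for r in rows if r.get(column) is None)
    return {
        # Fresh if the last load is no older than the agreed maximum age.
        "fresh": now - last_updated <= max_age,
        # Share of rows where the monitored column is missing.
        "null_rate": nulls / len(rows) if rows else 0.0,
    }


now = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
rows = [{"amount": 10}, {"amount": None}, {"amount": 5}, {"amount": 7}]
loaded_at = datetime(2024, 1, 1, 10, 30, tzinfo=timezone.utc)
report = quality_report(rows, "amount", loaded_at, timedelta(hours=2), now)
print(report)  # {'fresh': True, 'null_rate': 0.25}
```

Passing `now` explicitly keeps the check deterministic and easy to test; in production it defaults to the current UTC time.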

With DataOps, we can automate extraction schedules, loading, data transformation and the flow of data between systems. You can automate complex workflows and make sure the complete data pipeline keeps working properly. Automation also helps apply security controls consistently, protecting data from unauthorised access.
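The extract, transform and load steps that DataOps automates can be sketched with the Python standard library alone. This is a minimal illustration, assuming a small CSV export as the source and an in-memory SQLite database as the target; the `orders` table and its columns are hypothetical. The scheduling itself (for example by Apache Airflow or cron) is not shown.

```python
import csv
import io
import sqlite3
from typing import Dict, List, Tuple

# Hypothetical source export; the last row is missing its amount.
RAW_CSV = """order_id,country,amount
1,IN,100
2,in,250
3,US,
"""


def extract(text: str) -> List[Dict[str, str]]:
    """Read raw CSV text into a list of dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows: List[Dict[str, str]]) -> List[Tuple[int, str, float]]:
    """Drop rows without an amount, normalise country codes and cast types."""
    cleaned = []
    for r in rows:
        if not r["amount"]:
            continue  # skip rows with a missing amount
        cleaned.append((int(r["order_id"]), r["country"].upper(), float(r["amount"])))
    return cleaned


def load(rows: List[Tuple[int, str, float]], conn: sqlite3.Connection) -> None:
    """Upsert rows so that re-running a scheduled load does not create duplicates."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, country TEXT, amount REAL)"
    )
    conn.executemany("INSERT OR REPLACE INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), conn)
totals = conn.execute("SELECT country, SUM(amount) FROM orders GROUP BY country").fetchall()
print(totals)  # [('IN', 350.0)]
```

Making the load idempotent (`INSERT OR REPLACE` keyed on `order_id`) is what makes automation safe: a scheduler can retry a failed run without double-counting data.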

Who Should Learn DataOps?

DataOps is for everyone who is interested in working with large volumes of data. A data engineer who can manage data quality and scale operations can find good career opportunities in DataOps. DataOps also ensures clean and consistent data for training machine learning models and other purposes, so data scientists can find rewarding careers in DataOps too.

If you are a fresher, DataOps is also one of the best opportunities for you, because these next-gen skills are currently in high demand. You can find good opportunities if you build and apply your DataOps skills well.

DataOps Vs DevOps: The Key Differences

The two practices sound very similar, but they apply to different domains. DevOps is a practice in which development and operations teams work together across the software development life cycle to make software delivery more reliable, efficient and quick.

DataOps, on the other hand, focuses on breaking down the bottlenecks between producers and consumers of data and making data more reliable.

DevOps is an important part of the IT industry because it removes delays between software development teams and other teams, making the process smooth and reliable. Similarly, DataOps removes inconsistencies and delays to deliver high-quality data products for organisations in different fields.

Let us understand their differences a little more through the table below.

| Aspect | DataOps | DevOps |
| --- | --- | --- |
| Responsibility | Managing and optimising the data lifecycle | Automating and streamlining software development and deployment |
| Scope | Data pipelines, analytics, data integration | Application development, testing and delivery |
| Goal | Reliable, fast and repeatable delivery of data | Faster and more reliable software releases |
| Typical roles | Data engineers, data analysts, data scientists | Developers, QA engineers, operations teams |
| Common tools | Apache Airflow, dbt, Talend, Informatica, DataKitchen | Jenkins, Docker, Kubernetes, Git, Ansible, Terraform |
| Deliverables | Data pipelines, analytics dashboards, ETL processes | Applications, microservices, APIs |
| Testing | Data quality, schema validation, data integrity | Unit tests, integration tests, UI/UX tests |
| Monitoring | Data freshness, data lineage, pipeline health | Application uptime, performance, log monitoring |
| Managed assets | Data workflows, transformation scripts | Application code, configuration files |
| Continuous delivery | Continuous data delivery and analytics | Continuous Integration/Continuous Deployment (CI/CD) |
| Security | Access control, data masking, GDPR compliance | Application-level security, DevSecOps |
| Major automation stages | ETL/ELT pipeline orchestration, metadata automation | Build, test and deploy automation |

Benefits of Using DataOps For Analysis

Some of the major benefits of using DataOps for analysis and data management purposes are listed below.

1. Ensures High Data Quality and Trust

DataOps introduces testing, monitoring and versioning into data pipelines. Without these practices, poor data quality can lead to bad decisions, broken dashboards and ML model failures.
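One simple building block for versioning data is a content fingerprint: if two loads produce the same fingerprint, the data did not change; if they differ, a new version exists. The sketch below assumes records are JSON-serialisable dictionaries and treats row order as irrelevant. The function `dataset_fingerprint` is a hypothetical helper for illustration; dedicated data versioning tools do considerably more than this.

```python
import hashlib
import json
from typing import Any, Dict, List


def dataset_fingerprint(rows: List[Dict[str, Any]]) -> str:
    """Return an order-independent SHA-256 fingerprint of a list of JSON-serialisable records."""
    digest = hashlib.sha256()
    # Serialise each record with sorted keys, then sort the lines so row order does not matter.
    for line in sorted(json.dumps(r, sort_keys=True) for r in rows):
        digest.update(line.encode("utf-8"))
        digest.update(b"\n")
    return digest.hexdigest()


v1 = dataset_fingerprint([{"id": 1, "amount": 10}, {"id": 2, "amount": 20}])
v1_reordered = dataset_fingerprint([{"amount": 20, "id": 2}, {"id": 1, "amount": 10}])
v2 = dataset_fingerprint([{"id": 1, "amount": 10}, {"id": 2, "amount": 25}])
print(v1 == v1_reordered, v1 == v2)  # True False
```

Storing the fingerprint alongside each pipeline run makes it easy to tell which version of the data a dashboard or model was built from.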

2. Speeds Up Data Delivery

DataOps brings Agile and DevOps principles into data workflows. It enables faster development, testing, and deployment of data pipelines and models. It reduces the time it takes to go from raw data to business insight.

3. Improves Collaboration Across Teams

DataOps breaks down silos between data engineers, scientists, analysts and business teams. Everyone works with a shared view of data flows, definitions and responsibilities. It encourages feedback and iteration, improving overall project outcomes.

4. Enables Scalability and Automation

DataOps automates data integration, testing, deployment and monitoring. It supports scaling pipelines and ML workflows across larger datasets and teams. It reduces manual errors and improves reliability.

5. Boosts Career Opportunities

Roles such as Data Engineer, ML Engineer and Analytics Engineer often require DataOps knowledge, so learning it can widen your career options.

6. Reduces Firefighting and Manual Fixes

With proper testing and monitoring, you can detect broken data pipelines early. This avoids time-consuming fixes to downstream problems after a release. DataOps makes data systems more predictable and resilient.
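A common early-warning signal for a broken pipeline is a sudden change in data volume, for example a daily load that delivers a tenth of the usual rows. The sketch below flags a row count as anomalous when it lies more than a chosen number of standard deviations from recent history. The threshold of 3 and the sample counts are hypothetical; a z-score check is one simple choice among many detection methods.

```python
from statistics import mean, stdev
from typing import List


def volume_anomaly(history: List[int], today: int, z_threshold: float = 3.0) -> bool:
    """Flag today's row count if it is far from the recent average (simple z-score test)."""
    if len(history) < 2:
        return False  # not enough history to judge
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return today != mu  # history is constant, so any change is unusual
    return abs(today - mu) / sigma > z_threshold


history = [1000, 1020, 980, 1010, 990]  # row counts from previous runs
print(volume_anomaly(history, 120))   # True: far fewer rows than usual
print(volume_anomaly(history, 1005))  # False: within the normal range
```

Running a check like this right after each load lets the pipeline stop and alert before the bad batch reaches dashboards or models.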

The Complete DataOps Lifecycle

DataOps follows a series of stages in which teams and stakeholders work together, using different technologies, to manage and analyse data and create reliable data for the organisation.

1. Planning

This is the first phase of DataOps. Teams plan the complete roadmap and align KPIs, SLIs (service level indicators) and other targets that define the expected quality and reliability of data. The planning phase might also include research and selecting the specific data needed for the process.

In this phase, the data, product, engineering and business teams collaborate so that the phase ends with clear business goals and data expectations.

2. Development

The development phase builds the data product, such as transformations and data pipelines. In this phase of the DataOps lifecycle, teams create data models, write transformation code and develop machine learning models using tools like Python, SQL, Spark, pandas and more. The objective is to convert raw data into meaningful, reusable insights.
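Transformation code is easiest to test and reuse when it is written as a small pure function. The sketch below, assuming raw events arrive as dictionaries with hypothetical `date`, `country`, `amount` and `status` fields, turns them into daily revenue per country. It uses only the standard library; in practice the same logic is often written in SQL, Spark or pandas.

```python
from collections import defaultdict
from typing import Any, Dict, Iterable, Tuple


def daily_revenue(events: Iterable[Dict[str, Any]]) -> Dict[Tuple[str, str], float]:
    """Sum completed-order amounts per (date, country), normalising country codes."""
    totals: Dict[Tuple[str, str], float] = defaultdict(float)
    for e in events:
        if e.get("status") != "completed":
            continue  # ignore refunded, cancelled or pending orders
        key = (e["date"], e["country"].strip().upper())
        totals[key] += float(e["amount"])
    return dict(totals)


events = [
    {"date": "2024-05-01", "country": "in", "amount": "100", "status": "completed"},
    {"date": "2024-05-01", "country": "IN ", "amount": "50.5", "status": "completed"},
    {"date": "2024-05-01", "country": "US", "amount": "70", "status": "refunded"},
]
result = daily_revenue(events)
print(result)  # {('2024-05-01', 'IN'): 150.5}
```

Because the function has no side effects, it can be unit-tested in isolation and reused by several pipelines.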

3. Integration

The integration phase connects data components into the overall data architecture. It generally focuses on integrating with orchestration tools and making sure data components work together as one flow. This phase is especially important for keeping jobs scheduled and manageable.
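Orchestration tools such as Apache Airflow model a pipeline as a directed acyclic graph (DAG) of tasks and run each task only after its dependencies finish. The sketch below shows that core idea with Python's standard `graphlib` module (Python 3.9+), not with Airflow's own API. The task names are hypothetical; tasks in the same batch have no dependencies on each other and could run in parallel.

```python
from graphlib import TopologicalSorter
from typing import Dict, List, Set

# Hypothetical pipeline: each task maps to the set of tasks it depends on.
dag: Dict[str, Set[str]] = {
    "extract_orders": set(),
    "extract_customers": set(),
    "clean_orders": {"extract_orders"},
    "join_orders_customers": {"clean_orders", "extract_customers"},
    "build_dashboard": {"join_orders_customers"},
}


def run_in_batches(graph: Dict[str, Set[str]]) -> List[List[str]]:
    """Group tasks into batches; every task runs only after all of its dependencies."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()  # also raises CycleError if the graph contains a cycle
    batches = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        batches.append(ready)  # a real orchestrator would execute these tasks here
        sorter.done(*ready)
    return batches


batches = run_in_batches(dag)
print(batches)
# [['extract_customers', 'extract_orders'], ['clean_orders'],
#  ['join_orders_customers'], ['build_dashboard']]
```

Declaring dependencies explicitly, instead of hard-coding a run order, is what lets an orchestrator schedule, parallelise and retry jobs safely.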

4. Testing

In this phase, the team validates data quality and pipeline integrity using unit tests, integration tests and other data validation techniques. Data often contains anomalies such as nulls, duplicates, constraint violations and schema drift, which must be detected and fixed or removed before the data is used.
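The anomalies listed above can be caught with a few explicit checks. This is a minimal sketch, assuming records are dictionaries, an expected schema maps column names to Python types, and `order_id` is the unique key; the schema and sample rows are hypothetical. In practice teams often rely on a framework for this, for example dbt's built-in `not_null` and `unique` tests.

```python
from typing import Any, Dict, List

# Hypothetical expected schema: column name -> Python type.
EXPECTED_SCHEMA: Dict[str, type] = {"order_id": int, "country": str, "amount": float}


def validate(rows: List[Dict[str, Any]], schema: Dict[str, type], key: str) -> List[str]:
    """Return a list of problems: schema drift, nulls, wrong types and duplicate keys."""
    problems: List[str] = []
    seen = set()
    for i, r in enumerate(rows):
        if set(r) != set(schema):
            problems.append(f"row {i}: schema drift, columns {sorted(r)}")
            continue  # other checks are unreliable when the columns differ
        for col, typ in schema.items():
            if r[col] is None:
                problems.append(f"row {i}: null in '{col}'")
            elif not isinstance(r[col], typ):
                problems.append(f"row {i}: '{col}' is {type(r[col]).__name__}, expected {typ.__name__}")
        if r[key] in seen:
            problems.append(f"row {i}: duplicate {key}={r[key]}")
        seen.add(r[key])
    return problems


rows = [
    {"order_id": 1, "country": "IN", "amount": 10.0},
    {"order_id": 1, "country": "US", "amount": 5.0},                     # duplicate key
    {"order_id": 2, "country": None, "amount": 7.5},                     # null value
    {"order_id": 3, "country": "IN", "amount": 1.0, "discount": 0.1},    # new column
]
problems = validate(rows, EXPECTED_SCHEMA, "order_id")
for p in problems:
    print(p)
```

An empty result means the batch passed; a non-empty one can block the pipeline from moving the data to the next stage.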

5. Release & Deployment

After successful testing, the data pipeline is moved to a staging (pre-production) environment, where it is tested under realistic conditions to confirm how it behaves with real data. Deployment happens only when the output is reliable and of high quality.

The deployment phase pushes the data pipelines into production, with automation implemented through CI/CD pipelines. This enables fast, repeatable and safe deployments.

Get Started with DevOps and Cloud Computing

Discover a wide range of career opportunities in DevOps and Cloud Computing with the PW Skills DevOps and Cloud Computing Course, and master in-demand tools like Ansible, Kubernetes and Jenkins with our interactive coursework and dedicated mentors.

Work on real-world projects, practice exercises and module assignments that help you strengthen everything you learn throughout the course. Hurry! Enroll in this course, start your learning journey and become a certified DevOps engineer with pwskills.com.