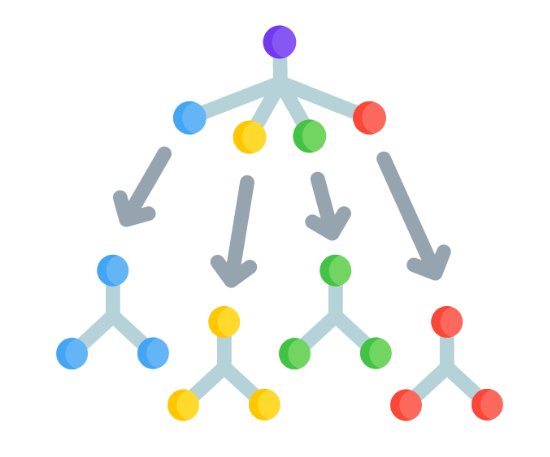

Imagine standing in front of a group of experts, each offering a unique perspective on a problem. Instead of relying on a single opinion, you hear them all and make a decision based on their collective wisdom. This is, in essence, how the Random Forest algorithm works in machine learning. It is not just an algorithm; it is a strategy that leverages the power of collaboration to achieve reliable and accurate predictions.

In this blog, we will walk through how Random Forest works and explore why it is one of the most trusted algorithms for both classification and regression tasks. With its intuitive design and its ability to handle complex datasets, Random Forest has become a favorite tool of data scientists and machine learning enthusiasts alike.

What is the Random Forest Algorithm?

The Random Forest algorithm is a supervised learning algorithm used for classification and regression tasks. It is based on ensemble learning, where multiple models are combined to produce a more accurate and stable prediction.

The Random Forest algorithm is popular because it is easy to use, often highly accurate, and performs well on many problems with little tuning. It works like a team of experts (the trees) making decisions together, which makes its predictions more reliable than those of any single tree.

During training, the algorithm builds multiple decision trees and then merges their outputs (by majority voting for classification or by averaging for regression) to make the final prediction. Compared with a single decision tree, this approach reduces overfitting, usually improves accuracy, and copes well with large, high-dimensional datasets.
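To see the effect of combining trees, the sketch below compares a single decision tree with a forest of 100 trees using 5-fold cross-validation. It uses scikit-learn's built-in breast cancer dataset purely as an assumed example; exact scores depend on the data and random seeds, so the forest is not guaranteed to win on every dataset.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import cross_val_score
from sklearn.tree import DecisionTreeClassifier

# Illustrative dataset (an assumption): 569 samples, 30 numerical features
X, y = load_breast_cancer(return_X_y=True)

# A single, fully grown decision tree
tree = DecisionTreeClassifier(random_state=0)
# An ensemble of 100 trees whose predictions are combined
forest = RandomForestClassifier(n_estimators=100, random_state=0)

# Mean accuracy over 5 cross-validation folds
tree_acc = cross_val_score(tree, X, y, cv=5).mean()
forest_acc = cross_val_score(forest, X, y, cv=5).mean()

print(f"Single tree:   {tree_acc:.3f}")
print(f"Random Forest: {forest_acc:.3f}")
```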

Features of Random Forest Algorithm

Some of the major characteristics of the Random Forest algorithm include:

- It combines multiple decision trees to improve overall accuracy and stability.

- It uses bootstrap sampling and random feature selection to ensure diversity in trees.

- Depending on the implementation, it can cope with missing values in the data; some libraries require missing values to be imputed before training.

- It is scalable: it works well on large datasets with many features, and because each tree is built independently, training can be parallelized.

- It is versatile: it handles both classification and regression tasks.

- It is robust: it is resistant to overfitting, especially when the forest contains enough trees.

- It works with both categorical and numerical data, so it can handle a mix of feature types without extensive preprocessing (although some libraries, such as scikit-learn, expect categorical features to be encoded as numbers first).
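In scikit-learn, several of these characteristics map to constructor parameters of `RandomForestClassifier`: `bootstrap` enables bootstrap sampling, `max_features` limits how many features each split considers, and `n_jobs` builds trees in parallel. The sketch below uses a small, made-up table (the column names and values are hypothetical) and one-hot encodes the categorical column with `pd.get_dummies`, because scikit-learn's forests expect numeric input.

```python
import pandas as pd
from sklearn.ensemble import RandomForestClassifier

# Hypothetical toy data mixing numerical and categorical features
df = pd.DataFrame({
    "age": [25, 32, 47, 51, 23, 36, 44, 29],
    "income": [40, 55, 80, 90, 35, 60, 75, 48],  # in thousands (made up)
    "city": ["A", "B", "A", "C", "B", "C", "A", "B"],
    "bought": [0, 0, 1, 1, 0, 1, 1, 0],
})

# One-hot encode the categorical column so every feature is numeric
X = pd.get_dummies(df.drop(columns=["bought"]), columns=["city"])
y = df["bought"]

model = RandomForestClassifier(
    n_estimators=50,      # number of trees in the forest
    bootstrap=True,       # each tree sees a bootstrap sample of the rows
    max_features="sqrt",  # each split considers a random subset of features
    n_jobs=-1,            # build trees in parallel on all CPU cores
    random_state=0,
)
model.fit(X, y)
print(model.predict(X.head(2)))
```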

How does the Random Forest Algorithm Work?

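- Create Multiple Subsets of Data: Random Forest draws random samples from the dataset with replacement, so some data points may appear more than once in a sample while others are left out (this is known as “bootstrap sampling”). Each sample is typically the same size as the original dataset. If you need data to practice on, platforms such as GitHub and Kaggle host many free datasets.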

- Build Decision Trees: For each subset, a decision tree is grown. However, at every split in the tree, only a random selection of features is considered to find the best split.

- Combine Predictions: Once all the trees are built, their predictions are combined. For classification, each tree votes for a class and the majority vote becomes the final prediction. For regression, the predictions of all the trees are averaged to produce the final result.

This process makes the Random Forest algorithm robust and less prone to errors caused by noisy data or overfitting.
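The three steps can be sketched from scratch with NumPy and scikit-learn's `DecisionTreeClassifier`. This is a simplified illustration, not scikit-learn's actual `RandomForestClassifier`: it uses hard majority voting as described above, whereas scikit-learn's implementation averages the trees' predicted class probabilities. The helper names `fit_forest` and `predict_forest` are hypothetical.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier


def fit_forest(X, y, n_trees=25, seed=0):
    """Train n_trees decision trees, each on its own bootstrap sample."""
    rng = np.random.default_rng(seed)
    n_samples = X.shape[0]
    trees = []
    for _ in range(n_trees):
        # Step 1: bootstrap sample (same size as the data, drawn with replacement)
        idx = rng.integers(0, n_samples, size=n_samples)
        # Step 2: grow a tree that considers a random subset of features
        # (square root of the feature count) at every split
        tree = DecisionTreeClassifier(
            max_features="sqrt", random_state=int(rng.integers(1_000_000))
        )
        tree.fit(X[idx], y[idx])
        trees.append(tree)
    return trees


def predict_forest(trees, X):
    """Step 3: each tree votes and the majority class wins."""
    votes = np.stack([tree.predict(X) for tree in trees])  # (n_trees, n_samples)
    # Count votes per sample; assumes labels are non-negative integers
    return np.array([np.bincount(col).argmax() for col in votes.T])


X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42
)
trees = fit_forest(X_train, y_train)
y_pred = predict_forest(trees, X_test)
print("Accuracy:", (y_pred == y_test).mean())
```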

When Should You Use Random Forest?

Random Forest is not the right choice for every problem. It tends to work well in situations such as:

- When you have a dataset with a mix of categorical and numerical features.

- When feature importance is critical for your analysis.

- When you want a tree-based model but overfitting is a concern.

- When you need a reliable and robust model that does not require extensive preprocessing.

How to Implement Random Forest Algorithm in Python?

The Random Forest algorithm can be used for both classification and regression tasks. In the steps below, we train a Random Forest classifier on the Iris dataset with scikit-learn: the model builds many decision trees during training and combines their predictions to choose the final class.

1. Import Required Libraries

Let us first import the required Python libraries: pandas and several scikit-learn modules.

```python
import pandas as pd

from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import accuracy_score, classification_report
```

2. Load the Dataset

Next, load the Iris dataset and store it in a pandas DataFrame. You can display the first few rows with data.head().

```python
# Load the Iris dataset
iris = load_iris()

# Create a DataFrame
data = pd.DataFrame(data=iris.data, columns=iris.feature_names)
data['target'] = iris.target

# Display the first few rows
print(data.head())
```

3. Split the Dataset

Separate the data into features and the target, and then split it into training (80%) and test (20%) sets.

```python
# Split the data into features (X) and target (y)
X = data.drop(columns=['target'])
y = data['target']

# Train-test split (80% training, 20% testing)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)
```

4. Train the Random Forest Model

Now, train your Random Forest model using scikit-learn's RandomForestClassifier.

```python
# Initialize the Random Forest Classifier
rf_classifier = RandomForestClassifier(n_estimators=100, random_state=42)

# Train the model
rf_classifier.fit(X_train, y_train)
```

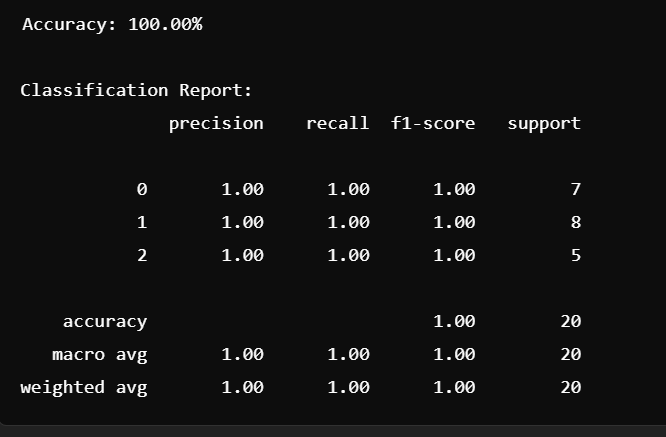

5. Evaluate Accuracy and the Classification Report

Finally, use the trained model to predict the test set, then calculate and display the accuracy and a classification report (precision, recall, and F1-score for each class).

```python
# Predict on the test set and evaluate the predictions
y_pred = rf_classifier.predict(X_test)
print("Accuracy:", accuracy_score(y_test, y_pred))
print(classification_report(y_test, y_pred, target_names=iris.target_names))
```


Advantages of Random Forest Algorithm

The Random Forest algorithm offers several advantages, including:

- High Accuracy: By aggregating multiple decision trees, Random Forest often achieves high accuracy and robustness, especially on large datasets.

- Handles Missing Data: Depending on the implementation, it can maintain good accuracy even when part of the data is missing.

- Feature Importance: It provides an intuitive measure of each feature's importance, which can guide feature selection.

- Resistant to Overfitting: Unlike an individual decision tree, Random Forest mitigates overfitting by combining the predictions of many trees.

- Works Well with High-Dimensional Data: It can handle datasets with a large number of features without significant loss in performance.

- Handles Categorical and Numerical Features: Random Forest can deal with datasets containing both types of features, although some libraries require categorical features to be encoded as numbers first.

Disadvantages of Random Forest Algorithm

There are a few limitations of the Random Forest Algorithm, including:

- Computationally Expensive: Building multiple decision trees requires significant computational resources, especially with large datasets.

- Memory Intensive: Storing a large number of decision trees can consume a lot of memory.

- Not Easily Interpretable: Unlike a single decision tree, Random Forest doesn’t provide clear decision rules, making it a black-box model.

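- Can Overfit Without Tuning: While it resists overfitting better than a single decision tree, poorly chosen parameters (for instance, fully grown, very deep trees on small or noisy datasets) can still lead to overfitting. Adding more trees, by contrast, does not increase overfitting; it mainly increases training time and memory use.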
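To make the overfitting and memory points concrete, the sketch below trains two forests on a synthetic, deliberately noisy dataset: one with fully grown trees and one with depth and leaf-size limits (`max_depth`, `min_samples_leaf`). It prints training and test accuracy along with the total number of tree nodes, used here as a rough proxy for model size. The dataset settings and parameter values are assumptions for illustration; suitable limits depend on your data and are usually chosen with cross-validation.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split

# Synthetic data; flip_y=0.1 randomly reassigns about 10% of labels (noise)
X, y = make_classification(n_samples=1000, n_features=20, flip_y=0.1, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=0)

settings = {
    "fully grown": {},
    "constrained": {"max_depth": 6, "min_samples_leaf": 5},
}

results = {}
for name, params in settings.items():
    rf = RandomForestClassifier(n_estimators=100, random_state=0, **params)
    rf.fit(X_train, y_train)
    # Total nodes across all trees: a rough proxy for memory use
    n_nodes = sum(est.tree_.node_count for est in rf.estimators_)
    results[name] = (rf.score(X_train, y_train), rf.score(X_test, y_test), n_nodes)
    print(f"{name:12s} train={results[name][0]:.3f} "
          f"test={results[name][1]:.3f} nodes={n_nodes}")
```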

Uses of Random Forest Algorithm in Machine Learning

Some of the major uses of the Random Forest algorithm in machine learning include classification, regression, and feature importance tasks. Random Forest is very versatile and is used in a wide range of applications:

Classification Tasks

- Spam Email Detection

- Customer Segmentation

- Medical Diagnosis

Regression Tasks

- Predicting House Prices

- Stock Market Forecasting

- Weather Prediction
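For regression tasks, scikit-learn provides `RandomForestRegressor`, whose prediction is the average of its trees' predictions. The sketch below uses the built-in diabetes dataset as a stand-in (an assumption; the tasks listed above would need their own data), reports R² and mean absolute error on a held-out test set, and checks that averaging the individual trees reproduces the forest's output.

```python
import numpy as np
from sklearn.datasets import load_diabetes
from sklearn.ensemble import RandomForestRegressor
from sklearn.metrics import mean_absolute_error, r2_score
from sklearn.model_selection import train_test_split

X, y = load_diabetes(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

reg = RandomForestRegressor(n_estimators=200, random_state=42)
reg.fit(X_train, y_train)
y_pred = reg.predict(X_test)

# The forest's prediction is the mean of the individual trees' predictions
manual = np.mean([tree.predict(X_test) for tree in reg.estimators_], axis=0)
print("Matches manual average:", np.allclose(manual, y_pred))

print(f"R^2: {r2_score(y_test, y_pred):.3f}")
print(f"MAE: {mean_absolute_error(y_test, y_pred):.1f}")
```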

Feature Importance

- The Random Forest algorithm provides a measure of feature importance, which can help identify the most relevant variables in the dataset.
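In scikit-learn, a fitted forest exposes impurity-based importances through the `feature_importances_` attribute; the values are non-negative and sum to 1. The sketch below ranks the Iris features. Impurity-based importances can favor features with many distinct values, so `sklearn.inspection.permutation_importance` is a common cross-check.

```python
import pandas as pd
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier

iris = load_iris()
rf = RandomForestClassifier(n_estimators=100, random_state=42)
rf.fit(iris.data, iris.target)

# Impurity-based importance of each feature, sorted from most to least important
importances = pd.Series(rf.feature_importances_, index=iris.feature_names)
importances = importances.sort_values(ascending=False)
print(importances)
```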

Outlier Detection

- It can be used to detect anomalies or outliers in the dataset.
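One way a Random Forest can flag outliers is through proximities: two samples are considered close when they often land in the same leaf across the trees. The sketch below computes proximities with scikit-learn's `apply` method (which returns each sample's leaf index in every tree) and scores each sample by the inverse of its summed squared proximity to other samples of the same class, a simplified score inspired by Breiman's proximity-based outlier measure. The dataset and the exact scoring formula are assumptions for illustration, and proximities are computed on the training data itself, so treat the scores as a rough ranking rather than a calibrated test.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier

X, y = load_iris(return_X_y=True)
rf = RandomForestClassifier(n_estimators=200, random_state=0).fit(X, y)

# Leaf index of every sample in every tree: shape (n_samples, n_trees)
leaves = rf.apply(X)

# proximity[i, j] = fraction of trees in which samples i and j share a leaf
proximity = (leaves[:, None, :] == leaves[None, :, :]).mean(axis=2)
np.fill_diagonal(proximity, 0.0)  # ignore each sample's proximity to itself

# Only compare each sample with other samples of the same class
same_class = y[:, None] == y[None, :]
summed_sq = (proximity**2 * same_class).sum(axis=1)

# Small summed proximity -> large score -> more outlying (epsilon avoids /0)
scores = 1.0 / (summed_sq + 1e-12)
top5 = np.argsort(scores)[::-1][:5]
print("Most outlying sample indices:", top5)
```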

Learn Data Science and Generative AI With PW Skills

Become a master in Data Science and Machine Learning with the PW Skills Data Science with Generative AI Course. Build real-world capstone projects based on the concepts covered in the machine learning, Python, and artificial intelligence modules.

Experts at PW Skills will guide you through an industry-oriented curriculum and prepare you for interview opportunities. Attend instructor-led live sessions, get dedicated support with this Python machine learning course, and become job-ready, only at pwskills.com.

Random Forest Algorithm In Machine Learning FAQs

Q1. What is the Random Forest Algorithm in Machine Learning?

Ans. The Random Forest algorithm is a supervised learning algorithm used for classification and regression tasks. It is based on ensemble learning, where multiple models are combined to produce a more accurate and stable prediction.

Q2. What are the uses of the Random Forest Algorithm?

Ans. Some of the major uses of the Random Forest algorithm in machine learning include classification, regression, and feature importance tasks.

Q3. What are the limitations of the Random Forest Algorithm?

Ans. Some of the major limitations of the Random Forest algorithm are that it is computationally expensive, memory intensive, and not easily interpretable.