Normalization vs Standardization: Datasets come in all varieties, with features measured on different scales, in different units, and drawn from different domains. Before algorithms can make sense of such data, it usually has to be brought onto a common footing by normalizing or standardizing it.

Like siblings, the two techniques are easily mistaken for each other, and the terms Normalization and Standardization are sometimes used interchangeably, but they are not the same. Knowing when to apply each one can be the difference between building a robust predictive model and scratching your head over wrong outputs.

In this Normalization vs Standardization guide, we break both techniques down, with a touch of coding magic so that the theory ties back to real work. Whether you are a student taking the first steps in your machine learning project or a professional coping with large amounts of enterprise data, you will find clarity here.

What is Data Normalization?

Normalizing the data is like resizing all the photos to fit in your memory book. Data normalization rescales values into a fixed range, usually 0 to 1 or -1 to 1.

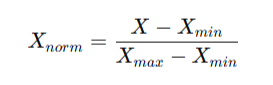

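The formula for Min-Max normalization is defined as:

$$X' = \frac{X - X_{\min}}{X_{\max} - X_{\min}}$$

where $X_{\min}$ and $X_{\max}$ are the smallest and largest values of the feature.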

In simpler terms, if one feature ranges from 10 to 1000 and another ranges only from 0 to 1, an algorithm may weigh the first feature more heavily simply because its numbers are larger. Normalization prevents this by bringing all features onto the same scale.
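To make the formula concrete, here is a minimal NumPy sketch of Min-Max normalization applied column by column. The helper name `min_max_normalize` and the sample values are hypothetical, chosen to mirror a 10-to-1000 feature next to a 0-to-1 feature.

```python
import numpy as np

def min_max_normalize(x: np.ndarray) -> np.ndarray:
    """Rescale each column of x to [0, 1] using (X - min) / (max - min).

    Assumes no column is constant (max == min), which would divide by zero.
    """
    col_min = x.min(axis=0)
    col_max = x.max(axis=0)
    return (x - col_min) / (col_max - col_min)

# Hypothetical data: column 0 spans 10 to 1000, column 1 spans 0 to 1
data = np.array([[10.0, 0.0],
                 [505.0, 0.5],
                 [1000.0, 1.0]])

result = min_max_normalize(data)
print(result)  # both columns now run 0.0, 0.5, 1.0
```

After scaling, the two features are on identical footing even though their raw magnitudes differed by a factor of about 1000.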


Real-world example – Normalization vs Standardization

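So imagine a test at school where some students are scored out of 100 and others out of 10. Converting both sets of scores to the same scale, for example by dividing each score by its maximum possible mark so that every score lies between 0 and 1, is a normalization step, and it lets you compare the scores directly.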
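A minimal sketch of the school-test example, assuming made-up scores for three students in each group. Dividing by the maximum possible mark is Min-Max normalization with a known minimum of 0 and a known maximum equal to the full marks.

```python
import numpy as np

# Hypothetical scores: one group graded out of 100, another out of 10
scores_out_of_100 = np.array([45, 80, 95])
scores_out_of_10 = np.array([4.5, 8, 9.5])

# Divide by the maximum possible mark so both groups lie between 0 and 1
scaled_100 = scores_out_of_100 / 100
scaled_10 = scores_out_of_10 / 10

print(scaled_100)  # values 0.45, 0.8, 0.95
print(scaled_10)   # values 0.45, 0.8, 0.95, now directly comparable
```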

Normalization comes in several forms, some of which look very similar. The most common ones are:

Min-Max Normalization

This scales all values into a fixed range, most commonly 0 to 1. It is especially useful when you know the lowest and highest values the data can take.

Z-Score Normalization (also known as Standardization)

This method is sometimes simply called "Normalization", which is where much of the confusion comes from. It rescales data based on its mean and standard deviation rather than its minimum and maximum.

Decimal Scaling Normalization

Moves the decimal point of the values (that is, divides them by a power of 10) until they lie in a known, predetermined range, typically with absolute values below 1.
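Decimal scaling is commonly defined as dividing every value by 10^j, where j is the smallest integer that brings the largest absolute value below 1. The sketch below assumes that definition; the function name `decimal_scaling` and the sample values are illustrative only.

```python
import numpy as np

def decimal_scaling(x: np.ndarray) -> np.ndarray:
    """Divide x by 10**j, where j is the smallest integer with max(|x|) / 10**j < 1."""
    max_abs = np.max(np.abs(x))
    if max_abs == 0:
        return x.astype(float)  # nothing to scale
    # floor(log10(max_abs)) + 1 is the number of digits before the decimal point
    j = int(np.floor(np.log10(max_abs))) + 1
    return x / (10 ** j)

values = np.array([-986, 45, 317, 712])  # hypothetical feature values
scaled = decimal_scaling(values)
print(scaled)  # every value divided by 1000
```

Because the divisor is a power of 10, the scaled numbers keep the same digits as the originals, which makes them easy to read back.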

Vector Normalization

Divides each vector by its length (its Euclidean, or L2, norm) so that every vector has unit length. It is often used in text mining, where documents are represented as vectors of word counts or weights.
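Here is a minimal sketch of vector (L2) normalization, computed once by hand and once with scikit-learn's `normalize` function, which normalizes each row to unit L2 length by default. The term-count vectors are hypothetical.

```python
import numpy as np
from sklearn.preprocessing import normalize

# Hypothetical term-count vectors for two short documents (one row per document)
doc_vectors = np.array([[3.0, 4.0, 0.0],
                        [1.0, 0.0, 0.0]])

# By hand: divide each row by its Euclidean length (assumes no all-zero rows)
lengths = np.linalg.norm(doc_vectors, axis=1, keepdims=True)
manual = doc_vectors / lengths

# scikit-learn: row-wise L2 normalization is the default behaviour
library = normalize(doc_vectors)

print(manual)  # first row becomes 0.6, 0.8, 0.0
print(np.allclose(manual, library))
```

After this step, documents are compared by the direction of their vectors rather than by how long they are.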

The Basics of Data Standardization: Normalization vs Standardization

If normalization is conceptually about resizing, standardization is about re-centering and rescaling. Rather than squeezing values into a fixed range, standardization transforms the data so that it has:

- Mean = 0

- Standard deviation = 1

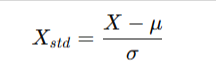

The formula for Standardization is given as:

$$Z = \frac{X - \mu}{\sigma}$$

where μ is the mean of the feature, and σ is its standard deviation.
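The sketch below applies the Z formula by hand and checks it against scikit-learn's `StandardScaler`. Note one detail: `StandardScaler` uses the population standard deviation (`ddof=0`), which is also NumPy's default, so the manual version matches it. The sample column is hypothetical.

```python
import numpy as np
from sklearn.preprocessing import StandardScaler

x = np.array([[10.0], [20.0], [30.0], [40.0]])  # hypothetical single feature

# Z = (X - mu) / sigma, using the population standard deviation (ddof=0)
mu = x.mean(axis=0)
sigma = x.std(axis=0)
z_manual = (x - mu) / sigma

z_sklearn = StandardScaler().fit_transform(x)

print(z_manual.ravel())
print(np.allclose(z_manual, z_sklearn))
print(z_manual.mean(), z_manual.std())  # approximately 0 and 1
```

If you compute σ with `ddof=1` (the sample standard deviation) instead, your numbers will differ slightly from `StandardScaler`.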

Normalization vs Standardization Key Differences

| Feature | Normalization | Standardization |
| --- | --- | --- |
| Definition | Rescales values into a fixed range (e.g., 0–1) | Rescales values to have mean 0 and standard deviation 1 |
| Best used when | You know the min and max values, or the data is bounded | The data has outliers or unknown bounds |
| Formula | (X - min) / (max - min) | (X - μ) / σ |
| Applications | Neural networks, image processing | Linear regression, clustering, PCA |
| Effect on outliers | Highly sensitive: a single extreme value compresses all other values | Less sensitive, though outliers still shift μ and σ |
| Interpretation | Relative to the range | Relative to the mean and spread |
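To see the "Effect on outliers" row in practice, the sketch below scales a hypothetical feature with one extreme value using both `MinMaxScaler` and `StandardScaler`. Neither method is immune: Min-Max pins the outlier at 1.0 and crushes the ordinary values toward 0, while standardization leaves the output unbounded but the inflated σ still bunches the ordinary values together.

```python
import numpy as np
from sklearn.preprocessing import MinMaxScaler, StandardScaler

# Hypothetical feature: four ordinary values and one extreme outlier
x = np.array([[1.0], [2.0], [3.0], [4.0], [100.0]])

mm = MinMaxScaler().fit_transform(x).ravel()
zs = StandardScaler().fit_transform(x).ravel()

# Min-Max: outlier becomes 1.0, ordinary values land between 0 and about 0.03
print(np.round(mm, 3))
# Standardization: outlier sits near 2, ordinary values cluster around -0.5
print(np.round(zs, 3))
```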

Why Learn Normalization vs Standardization?

Because machine learning models are picky eaters. They don’t like unbalanced meals. Algorithms like KNN and SVM compare data points by distance, and neural networks are trained with gradient descent, so all of them care about scale. Feed them unscaled data, and they’ll get indigestion.
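The following sketch shows why distance-based models care about scale. It uses three hypothetical customers described by income and age; the "typical spreads" used for scaling (10,000 dollars and 10 years) are assumptions for illustration, standing in for what a scaler would estimate from real data.

```python
import numpy as np

# Hypothetical customers: [annual income in dollars, age in years]
a = np.array([50_000.0, 25.0])
b = np.array([51_000.0, 60.0])  # similar income, very different age
c = np.array([60_000.0, 26.0])  # different income, similar age

def euclidean(p, q):
    """Plain Euclidean distance between two feature vectors."""
    return float(np.sqrt(np.sum((p - q) ** 2)))

# Unscaled: income differences swamp age, so a looks closest to b
print(euclidean(a, b), euclidean(a, c))

# Divide each feature by an assumed typical spread so both count comparably
spread = np.array([10_000.0, 10.0])
print(euclidean(a / spread, b / spread), euclidean(a / spread, c / spread))
```

Without scaling, a 35-year age gap barely registers next to a 1,000-dollar income gap; after scaling, the nearest neighbour of `a` flips from `b` to `c`.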

By mastering Normalization vs Standardization, you unlock:

- Cleaner preprocessing pipelines

- More accurate predictions

- Models that generalize better in the real world

Coding Examples: Normalization vs Standardization in Python

Let’s see both methods in action with scikit-learn:

```python
import numpy as np
from sklearn.preprocessing import MinMaxScaler, StandardScaler

# Sample data: 4 rows, 2 features on very different scales
data = np.array([[10, 200],
                 [20, 400],
                 [30, 600],
                 [40, 800]])

# Normalization: rescale each column to the range 0 to 1
norm_scaler = MinMaxScaler()
normalized = norm_scaler.fit_transform(data)

# Standardization: give each column mean 0 and standard deviation 1
std_scaler = StandardScaler()
standardized = std_scaler.fit_transform(data)

print("Normalized Data:\n", normalized)
print("\nStandardized Data:\n", standardized)
```

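Run this, and you’ll see how the same dataset transforms differently under the two methods. Under Min-Max normalization each column becomes 0, 0.333, 0.667 and 1, while under standardization each column becomes roughly -1.342, -0.447, 0.447 and 1.342. Both columns end up identical because the second column is simply 20 times the first, and both scalers remove that difference in scale.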

Real-World Applications: Normalization vs Standardization

Normalization in action

- Image processing: Pixel values (0-255) are normalized to 0-1 for neural networks.

- Financial data: Stock price fluctuations normalized before feeding into forecasting models.
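The image-processing case above is a one-line division. Here is a minimal sketch with a hypothetical 2x2 grayscale image stored as 8-bit integers; converting to `float32` is an assumed choice, as it is a common input dtype for neural networks.

```python
import numpy as np

# Hypothetical 2x2 grayscale image with 8-bit pixel values (0 to 255)
image = np.array([[0, 64], [128, 255]], dtype=np.uint8)

# Convert to float32, then divide by 255 so pixel values fall in [0, 1]
image_scaled = image.astype(np.float32) / 255.0
print(image_scaled.min(), image_scaled.max())  # 0.0 1.0
```

Because the pixel range 0 to 255 is known in advance, this is Min-Max normalization with fixed bounds rather than bounds estimated from the data.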

Standardization in action

- Medical studies: Standardizing lab values across populations.

- Clustering (K-Means): Ensures fair distance calculations between features like income and age.
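As a sketch of the clustering case, the example below generates hypothetical customers in two age groups with the same typical income, then runs K-Means with and without a `StandardScaler` in front. The data, the `agreement` helper, and the parameter choices (`n_init=10`, `random_state=0`) are all assumptions for illustration.

```python
import numpy as np
from sklearn.cluster import KMeans
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Hypothetical customers: [annual income in dollars, age in years].
# Both groups share the same typical income; they differ only in age.
rng = np.random.default_rng(0)
young = np.column_stack([rng.normal(45_000, 8_000, 50), rng.normal(25, 3, 50)])
older = np.column_stack([rng.normal(45_000, 8_000, 50), rng.normal(60, 3, 50)])
customers = np.vstack([young, older])
true_group = np.array([0] * 50 + [1] * 50)

# Without scaling, income (spread in the thousands) dominates the distances
raw_labels = KMeans(n_clusters=2, n_init=10, random_state=0).fit_predict(customers)

# With standardization, income and age contribute on comparable scales
scaled_model = make_pipeline(StandardScaler(), KMeans(n_clusters=2, n_init=10, random_state=0))
scaled_labels = scaled_model.fit_predict(customers)

def agreement(labels):
    """Fraction of customers whose cluster matches their age group (label order ignored)."""
    match = np.mean(labels == true_group)
    return max(match, 1 - match)

print(f"Agreement without scaling: {agreement(raw_labels):.2f}")
print(f"Agreement with scaling:    {agreement(scaled_labels):.2f}")
```

Unscaled, K-Means splits customers by income, which carries no group structure here; after standardization it recovers the age groups.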

Advantages of Normalization

Normalization advantages include:

- Easy to implement

- Works well when data has known boundaries

- Can speed up convergence in gradient descent

Disadvantages of Normalization

Normalization also has several disadvantages, listed below:

- Sensitive to outliers

- Not suitable if future data goes beyond the current min/max, because such values map outside the 0 to 1 range
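Here is a minimal sketch of the out-of-range problem: a `MinMaxScaler` fitted on hypothetical training values from 10 to 30 maps new values of 5 and 40 to -0.25 and 1.5. Clipping to [0, 1] is shown only as one possible workaround (an assumption, not always appropriate, since it discards information).

```python
import numpy as np
from sklearn.preprocessing import MinMaxScaler

train = np.array([[10.0], [20.0], [30.0]])   # hypothetical training data
new_data = np.array([[5.0], [40.0]])         # outside the training range 10 to 30

scaler = MinMaxScaler().fit(train)
out = scaler.transform(new_data).ravel()
print(out)  # -0.25 and 1.5: below 0 and above 1

# Possible workaround (an assumption): clip transformed values to [0, 1]
clipped = np.clip(out, 0, 1)
print(clipped)
```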

Advantages and Disadvantages of Standardization

Standardization comes with its own pros and cons.

Advantages:

- Handles outliers better than Min-Max normalization, although the mean and standard deviation are still influenced by them

- Widely applicable in machine learning models

- Works well with normally distributed data

Disadvantages:

- Doesn’t guarantee a bounded range

- Interpretation of the transformed values may be less intuitive

Career Prospects and Pay Scales Insights

Data preparation is one of the biggest and most important parts of the work of a Data Analyst, Data Scientist, or Machine Learning Engineer. No impressive AI can be built without it.

- Data analyst: 4 to 8 LPA (India), $65,000 to $90,000 (USA)

- Data scientist: 8 to 20 LPA (India), $90,000 to $150,000 (USA)

- ML engineer: 12 to 25 LPA (India), $100,000 to $160,000 (USA)

Understanding Normalization vs Standardization is one of those small details that can give you an edge in interviews and projects.

Common Mistakes by Beginners

- Confusing the terminology: Calling standardization “normalization” (and vice versa).

- Scaling the test data separately: Always fit scalers on the training data, then apply them to the test data.

- Forgetting the nature of the algorithm: Not every model requires scaling (e.g., decision trees do not).

- Blind scaling: Using Min-Max normalization on data with heavy outliers is not a good idea, because the outliers squeeze all other values into a narrow band.
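A minimal sketch of the "fit on training data, then apply to test data" rule, using hypothetical random features. The scaler learns its mean and standard deviation from the training split only, and the test split is transformed with those same statistics.

```python
import numpy as np
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler

rng = np.random.default_rng(42)
X = rng.normal(loc=50, scale=10, size=(100, 2))  # hypothetical features

X_train, X_test = train_test_split(X, test_size=0.2, random_state=42)

scaler = StandardScaler()
X_train_scaled = scaler.fit_transform(X_train)  # learn mean/std from training data only
X_test_scaled = scaler.transform(X_test)        # reuse those statistics; never refit on test

print(scaler.mean_)  # equals the training-set column means
```

Calling `fit_transform` on the test set instead would let test-set statistics leak into preprocessing and make evaluation results look better than they are.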

Stepwise Learning Path: Roadmap to Learn

- Learn the math basics: Mean, variance, standard deviation.

- Play with small datasets: Use sklearn scalers in Python.

- Test algorithms: Run KNN without scaling and with scaling to see the difference.

- Explore real datasets: Kaggle datasets in finance, healthcare, etc.

- End-to-end projects: Include scaling in preprocessing pipelines.
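For the "test algorithms" step, here is a sketch comparing KNN with and without standardization. It assumes scikit-learn's bundled wine dataset, swapped in for illustration because its features sit on very different scales. Wrapping the scaler in a pipeline means it is refit on each training fold during cross-validation, so no test-fold statistics leak in.

```python
from sklearn.datasets import load_wine
from sklearn.model_selection import cross_val_score
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

X, y = load_wine(return_X_y=True)

unscaled = KNeighborsClassifier(n_neighbors=5)
scaled = make_pipeline(StandardScaler(), KNeighborsClassifier(n_neighbors=5))

# 5-fold cross-validation; the pipeline fits the scaler inside each training fold
unscaled_acc = cross_val_score(unscaled, X, y, cv=5).mean()
scaled_acc = cross_val_score(scaled, X, y, cv=5).mean()

print(f"KNN without scaling: {unscaled_acc:.3f}")
print(f"KNN with scaling:    {scaled_acc:.3f}")
```

The same pattern works for the Iris mini project: swap in `load_iris` and compare the two scores.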

Mini Projects You May Try

- House Price Prediction: Normalize features like square footage, standardize features like price.

- Iris Dataset Classification: Compare accuracy with and without scaling.

- Customer Segmentation: KMeans clustering with standardized inputs.

Normalization vs Standardization Comparison with Other Techniques

Normalization and standardization are the most commonly discussed scaling methods, but other techniques exist as well:

- Robust Scaling: Uses median and interquartile range, great for outliers.

- Log Transformation: Reduces skewness in data distributions.
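A minimal sketch of both alternatives on a hypothetical skewed feature with one outlier. `RobustScaler` subtracts the median and divides by the interquartile range (25th to 75th percentile by default), so the ordinary values keep a sensible spread; `np.log1p` computes log(1 + x), which compresses large values and works for zeros.

```python
import numpy as np
from sklearn.preprocessing import RobustScaler

# Hypothetical right-skewed feature with one large outlier
x = np.array([[1.0], [2.0], [3.0], [4.0], [100.0]])

# Robust scaling: (x - median) / IQR; here median = 3 and IQR = 4 - 2 = 2
robust = RobustScaler().fit_transform(x).ravel()
print(robust)  # -1, -0.5, 0, 0.5, 48.5

# Log transformation: log(1 + x) shrinks the outlier and reduces skew
logged = np.log1p(x).ravel()
print(np.round(logged, 3))
```

Unlike Min-Max or Z-score scaling, the ordinary values here stay evenly spread (steps of 0.5) because the median and IQR ignore the outlier.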

Troubleshooting Tips

- Visualize data distribution before and after scaling.

- Wrap your preprocessing steps in scikit-learn pipelines so that scalers are fit only on training data; this prevents data leakage.

- Scaling is really case-specific. There is no one rule that fits all.

The Scope of Scaling in Machine Learning in the Future

With the introduction of AutoML (Automated Machine Learning), much of the preprocessing, including scaling, is done automatically. However, a true understanding of the “why” behind these steps will always keep humans ahead in the game.

Get Smart Learning with PW Skills Data Analytics Course

Want to go beyond just theory? The PW Skills Data Analytics course gives you hands-on, experience-based learning in data preprocessing methods, visualization skills, and machine learning techniques. From Normalization vs Standardization to the most valuable analytics skills, you prepare for the job market through real-life projects. Whether you’re looking to change careers or move up in your current role, this course will make you industry-ready.

FAQs

Which is better: Normalization or Standardization?

It depends. Normalization suits data with fixed bounds, while standardization is the better choice when the data has outliers or unknown bounds.

Does every model in machine learning need scaling?

No. Tree-based models need no scaling, whereas distance-based models do.

Can I normalize and standardize the data together?

You rarely need to. Generally, you should choose one according to the dataset and the needs of the model.

How will I decide between Min-Max and Z-Score scaling?

If your data has well-defined min and max boundaries, go for Min-Max. If the data is roughly normally distributed or contains outliers, go with Z-Score.