In machine learning, k nearest neighbor (KNN) is one of the first algorithms you are likely to study. The algorithm is simple, intuitive, and surprisingly effective. While neural networks are far more complex, k nearest neighbor behaves almost like a person would: it looks around at nearby points and makes a decision.

In this 2025 edition, we will delve into KNN: what it is, how it works, why it is still relevant in today's AI world, and how you can use it, with real-life examples, formulas, and Python code.

K Nearest Neighbor Algorithm

K nearest neighbor is a supervised learning algorithm that is often called a lazy algorithm. Why? Because it does not learn anything during the training phase; instead, it waits until a new data point arrives and then decides about that point by comparing it with the existing ones.

Suppose you have recently moved to a new city. If the people around you want to guess what you might fancy, be it a coffee shop, a gym, or a movie genre, they check what your closest neighbors like. This is what the k nearest neighbor algorithm does in machine learning.

How K Nearest Neighbor Works

To see how k nearest neighbor works, let's break it down step by step:

- Choose K – Decide how many neighbors to consider (k).

- Calculate Distance – Find how close existing data points are to the new one.

- Select Neighbors – Pick the k closest data points.

- Vote or Average – For classification, take a majority vote; for regression, take the average.

Example:

Say you want to predict if a student will pass an exam. Look at the 5 students most similar (k=5). If 4 out of 5 passed, chances are the new student will pass too.
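The four steps and the student example can be sketched in a few lines of NumPy. This is a minimal illustration, not a production implementation: the function name `knn_predict`, the two features (hours studied and attendance), and all eight training rows are made up for the example, and Euclidean distance is assumed.

```python
from collections import Counter

import numpy as np


def knn_predict(X_train, y_train, x_new, k=5):
    """Classify x_new by majority vote among its k nearest training points."""
    X_train = np.asarray(X_train, dtype=float)
    x_new = np.asarray(x_new, dtype=float)
    # Step 2: Euclidean distance from the new point to every stored point.
    distances = np.sqrt(((X_train - x_new) ** 2).sum(axis=1))
    # Step 3: indices of the k smallest distances.
    nearest = np.argsort(distances)[:k]
    # Step 4: majority vote over the labels of those neighbors.
    votes = Counter(y_train[i] for i in nearest)
    return votes.most_common(1)[0][0]


# Hypothetical data: [hours studied per week, attendance %] for eight students.
X_train = [[2, 60], [3, 65], [4, 55], [8, 90], [9, 85], [10, 95], [7, 80], [8, 88]]
y_train = ["fail", "fail", "fail", "pass", "pass", "pass", "pass", "fail"]

# Step 1: choose k = 5, as in the example above.
new_student = [8, 86]
prediction = knn_predict(X_train, y_train, new_student, k=5)
print(prediction)  # 4 of the 5 nearest students passed, so this prints "pass"
```

Note that there is no training step at all: the function only stores nothing and compares, which is exactly why KNN is called lazy.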

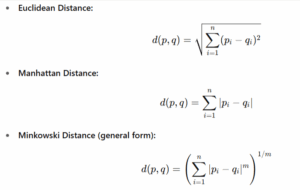

Mathematical Foundations of K Nearest Neighbor

The distance calculation is the backbone of the k nearest neighbor algorithm. The most widely applied formulas are:
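Assuming the formulas meant here are the three metrics most commonly paired with KNN (Euclidean, Manhattan, and Minkowski distance), they can be written as follows for two points $x$ and $y$ with $n$ features each. The symbols $x_i$, $y_i$, $n$, and $p$ are notation chosen for this sketch.

```latex
% Euclidean distance (straight-line distance)
d_{\text{Euclidean}}(x, y) = \sqrt{\sum_{i=1}^{n} (x_i - y_i)^2}

% Manhattan distance (sum of absolute differences)
d_{\text{Manhattan}}(x, y) = \sum_{i=1}^{n} \lvert x_i - y_i \rvert

% Minkowski distance (general form with order p \ge 1)
d_{\text{Minkowski}}(x, y) = \left( \sum_{i=1}^{n} \lvert x_i - y_i \rvert^{p} \right)^{1/p}
```

Minkowski distance reduces to Manhattan distance for $p = 1$ and to Euclidean distance for $p = 2$.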

Choosing the right metric can make or break the accuracy of k nearest neighbor.
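One way to check how much the metric matters on a given dataset is to cross-validate the same classifier under several metrics. The sketch below assumes scikit-learn and uses the Iris dataset purely as a stand-in; the three metrics compared and the 5-fold setup are choices made for this example.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import cross_val_score
from sklearn.neighbors import KNeighborsClassifier

X, y = load_iris(return_X_y=True)

# Mean 5-fold cross-validated accuracy for each distance metric.
metric_scores = {}
for metric in ("euclidean", "manhattan", "chebyshev"):
    knn = KNeighborsClassifier(n_neighbors=5, metric=metric)
    metric_scores[metric] = cross_val_score(knn, X, y, cv=5).mean()

for metric, score in metric_scores.items():
    print(f"{metric}: {score:.3f}")
```

On a small, clean dataset the differences may be minor; they tend to matter more when features have different scales or many dimensions.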

Choosing the Right Value of K in K Nearest Neighbor

The most crucial decision in the k nearest neighbor algorithm is selecting k:

- Small k (like k=1 or 3): Very sensitive; may misclassify due to noise.

- Large k (like k=15 or 20): Smoother, but may ignore finer details.

A practical tip: start with odd values of k to avoid tied votes in two-class problems, then use cross-validation to find the sweet spot.
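The tip about cross-validating k can be sketched with scikit-learn as follows. The candidate range (odd values from 1 to 21), the 5-fold split, and the Iris dataset are assumptions made for illustration; on your own data you would adjust all three.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import cross_val_score
from sklearn.neighbors import KNeighborsClassifier

X, y = load_iris(return_X_y=True)

candidate_ks = list(range(1, 22, 2))  # odd values 1, 3, ..., 21

# Mean 5-fold cross-validated accuracy for each candidate k.
cv_scores = {}
for k in candidate_ks:
    knn = KNeighborsClassifier(n_neighbors=k)
    cv_scores[k] = cross_val_score(knn, X, y, cv=5).mean()

# Keep the k with the highest mean accuracy.
best_k = max(cv_scores, key=cv_scores.get)
print("Best k:", best_k, "mean accuracy:", round(cv_scores[best_k], 3))
```

Iris has three classes, so an odd k does not fully rule out ties here; the odd-number rule is only a guarantee for two-class problems.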

K Nearest Neighbor Example in Real Life

Three k nearest neighbor examples in simple terms:

- Health care: Predicting whether a tumor is malignant or benign by looking at data from patients similar to the new patient.

- E-Commerce: Recommending products to a shopper by comparing them with buyers who have purchased similar items.

- Social Media: Suggesting friends on Facebook or LinkedIn by looking at mutual connections.
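The health care example can be tried on the breast cancer dataset that ships with scikit-learn. This is only a sketch of the idea: the 70/30 split, k=5, and the `StandardScaler` step are choices made here (scaling is added so that no single measurement dominates the distance), not something prescribed by the article.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

data = load_breast_cancer()
X_train, X_test, y_train, y_test = train_test_split(
    data.data, data.target, test_size=0.3, random_state=42, stratify=data.target
)

# Scale the features, then classify each tumor by its 5 most similar cases.
model = make_pipeline(StandardScaler(), KNeighborsClassifier(n_neighbors=5))
model.fit(X_train, y_train)

tumor_accuracy = model.score(X_test, y_test)
print("Test accuracy:", round(tumor_accuracy, 3))

# target_names maps the predicted class index back to 'malignant' or 'benign'.
first_label = data.target_names[model.predict(X_test[:1])[0]]
print("First test sample predicted as:", first_label)
```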

Python Implementation of K Nearest Neighbor

Students often wonder how to implement the k nearest neighbor algorithm in machine learning. Here is a short Python snippet:

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier
from sklearn.metrics import accuracy_score

# Load dataset
iris = load_iris()
X, y = iris.data, iris.target

# Train-test split
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=42)

# Initialize KNN
knn = KNeighborsClassifier(n_neighbors=5)

# Train the model (for KNN this only stores the training data)
knn.fit(X_train, y_train)

# Predict
y_pred = knn.predict(X_test)

# Accuracy
print("Accuracy:", accuracy_score(y_test, y_pred))
```

This is one of the most common k nearest neighbor examples taught to beginners.

Why do students and professionals still love the k nearest neighbor algorithm?

- Easy to implement and interpret.

- Works for both classification and regression.

- Makes no assumptions regarding data distribution.
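To illustrate the regression case, here is a minimal sketch with scikit-learn's `KNeighborsRegressor`. The four training points are toy values invented for the example; the prediction is simply the average target of the k nearest neighbors.

```python
import numpy as np
from sklearn.neighbors import KNeighborsRegressor

# Toy one-feature dataset (made up for illustration).
X_train = np.array([[1.0], [2.0], [3.0], [10.0]])
y_train = np.array([1.0, 2.0, 3.0, 10.0])

reg = KNeighborsRegressor(n_neighbors=3)
reg.fit(X_train, y_train)

# The 3 nearest neighbors of x=2 are x=1, 2, 3, so the prediction
# is the mean of their targets: (1 + 2 + 3) / 3 = 2.0
pred = reg.predict(np.array([[2.0]]))[0]
print(pred)
```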

Demerits of K Nearest Neighbor Algorithm

The k nearest neighbor algorithm does have some shortcomings, even though it is simple:

- On huge datasets, every prediction requires scanning the stored data, which is slow.

- Sensitive to irrelevant features.

- Struggles with classification in high-dimensional data.
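The high-dimensional problem can be made concrete with a small experiment: as the number of features grows, the nearest and the farthest point end up almost equally far away, so "nearest" carries less information. The sketch below uses uniformly random points, which is an assumption chosen for simplicity; the helper name `nearest_to_farthest_ratio` is made up for this example.

```python
import numpy as np

rng = np.random.default_rng(0)


def nearest_to_farthest_ratio(n_dims, n_points=1000):
    """Ratio of the nearest to the farthest distance from one random query point."""
    points = rng.random((n_points, n_dims))
    query = rng.random(n_dims)
    distances = np.linalg.norm(points - query, axis=1)
    return distances.min() / distances.max()


ratio_low = nearest_to_farthest_ratio(2)
ratio_high = nearest_to_farthest_ratio(1000)

# A ratio close to 1 means the nearest neighbor is barely closer than the farthest.
print(f"2 dimensions:    {ratio_low:.3f}")
print(f"1000 dimensions: {ratio_high:.3f}")
```

The ratio in 1000 dimensions comes out much closer to 1 than in 2 dimensions, which is one reason feature selection or dimensionality reduction is often applied before KNN.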

Applications of K Nearest Neighbor

The KNN algorithm powers a lot of applications in the real world:

- Banking: Fraud Detection.

- Medicine: Predicting Diseases.

- Retail: Customer Segmentation.

- Computer Vision: Face Recognition Systems.

Comparison of K Nearest Neighbor to Other Algorithms

A quick comparison:

| Algorithm | Training Time | Prediction Time | Flexibility | Use Case |
| --- | --- | --- | --- | --- |
| KNN | Fast | Slow | High | Baseline models, small data |
| Decision Trees | Medium | Fast | Medium | Classification tasks |
| SVM | Slow | Medium | High | Complex boundaries |
| Logistic Regression | Fast | Fast | Low | Binary classification |

This shows where k nearest neighbor shines and where it lags behind.

K Nearest Neighbor Algorithm in 2025 and Beyond: The Future

Even in this age of commercial deep learning, the k nearest neighbor algorithm is still very much in play:

- Optimized libraries keep making distance computations faster.

- Hybrid approaches combine KNN with other models for increased accuracy.

- Because of its simplicity, it is widely used in edge computing and on IoT devices.

Learn Data Science with PW Skills

If you want to not just read about algorithms but actually implement them, PW Skills offers a Data Science Course designed for students and working professionals. With hands-on projects, mentorship, and real-life use cases, you will gain the confidence to apply machine learning in your career.

K Nearest Neighbor FAQs

Is the k nearest neighbor algorithm used in deep learning?

No, but it is often used as a baseline model before deep learning methods are applied.

Is k nearest neighbor applicable when dealing with text data?

Yes, when the text is converted into numerical vectors through TF-IDF or word embeddings.

Why is k nearest neighbor considered a lazy learner?

Because it doesn't build a model while training; it only retains data.

Does k nearest neighbor work for high dimensional data?

Not always, since it has to fight its way through the curse of dimensionality. Some form of feature reduction is often done.
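As a small illustration of the FAQ answer on text data, the sketch below turns sentences into TF-IDF vectors and classifies a new sentence by its nearest neighbor. The four training sentences, the two labels, and the choice of cosine distance with k=1 are all assumptions made for this toy example.

```python
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline

# Tiny hypothetical corpus with two topics.
docs = [
    "the team won the football match",
    "the striker scored a late goal",
    "bake the cake in the oven",
    "stir the soup and add salt",
]
labels = ["sports", "sports", "cooking", "cooking"]

# TF-IDF converts each sentence into a numerical vector; KNN then
# compares vectors using cosine distance.
text_knn = make_pipeline(
    TfidfVectorizer(stop_words="english"),
    KNeighborsClassifier(n_neighbors=1, metric="cosine"),
)
text_knn.fit(docs, labels)

text_prediction = text_knn.predict(["football goal in the match"])[0]
print(text_prediction)  # shares words only with the sports sentences: "sports"
```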